Augmented Reality Guidance for Robotic Surgery

|

|

| Segmented MRI image |

Rendering of the pelvis |

|

|

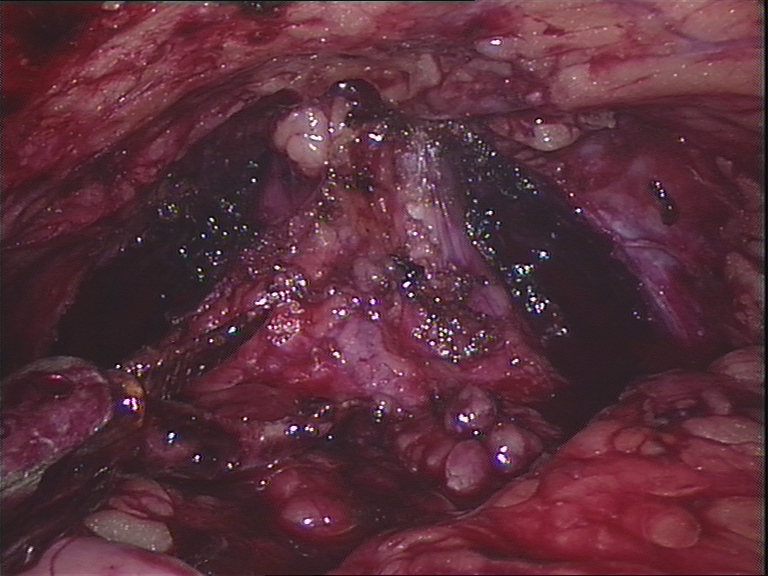

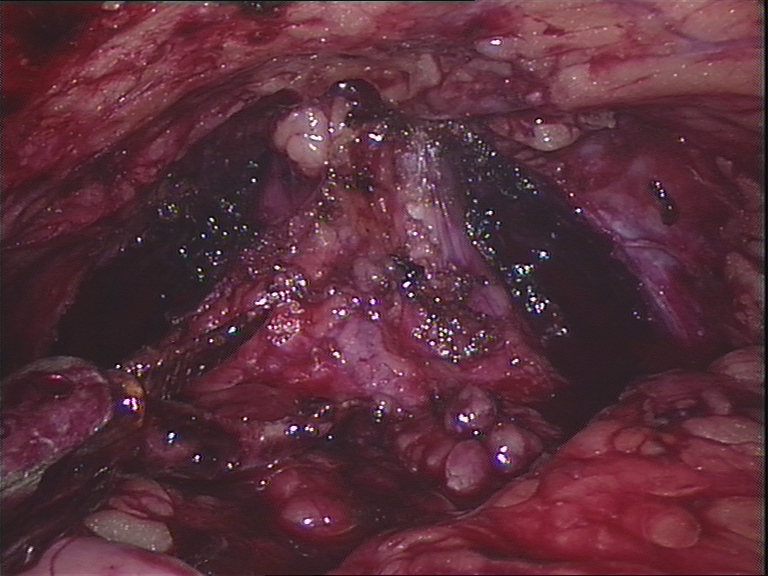

| Image through the endoscope |

Combined real and virtual images

|

Background

Image-guided surgery aims to use images taken before a procedure to help the surgeon navigate. In augmented reality (AR) the guidance is provided directly on the surgeon's view of the patient by mixing real and virtual images (see above). Technical tasks include calibration and tracking of devices relative to the patient, registration (or alignment) of the preoperative model to the patient, and suitable rendering and visualisation of the preoperative data.
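The chain of transforms implied by these tasks can be sketched as follows. This is a minimal illustration, assuming an ideal pinhole camera (no lens distortion) and rigid 4x4 homogeneous transforms; the frame names such as `T_patient_model` and `T_camera_patient` and all numerical values are hypothetical, not taken from this project.

```python
"""Sketch: mapping a point from the preoperative model into the endoscope image.

All frame names, matrices and numbers are hypothetical illustrations.
"""
import math


def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid(rz_deg, tx, ty, tz):
    """4x4 rigid transform: rotation about z by rz_deg degrees, then a translation."""
    c, s = math.cos(math.radians(rz_deg)), math.sin(math.radians(rz_deg))
    return [[c, -s, 0.0, tx], [s, c, 0.0, ty], [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]


def apply(t, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


def project(fx, fy, cx, cy, p):
    """Ideal pinhole projection (no lens distortion) of a camera-frame point to pixels."""
    x, y, z = p
    return (fx * x / z + cx, fy * y / z + cy)


# Registration (hypothetical values): preoperative model (MRI) frame -> patient frame.
T_patient_model = rigid(10.0, 5.0, -3.0, 0.0)
# Tracking (hypothetical values): patient frame -> endoscope camera frame.
T_camera_patient = rigid(0.0, 0.0, 0.0, 80.0)
# Compose once, then map model points straight into the camera frame.
T_camera_model = matmul(T_camera_patient, T_patient_model)

point_model = (12.0, 4.0, 20.0)  # a point on the segmented model, in mm
# Calibration supplies the intrinsics fx, fy, cx, cy (hypothetical here).
u, v = project(800.0, 800.0, 320.0, 240.0, apply(T_camera_model, point_model))
print(f"overlay pixel: ({u:.1f}, {v:.1f})")
```

Registration supplies `T_patient_model`, tracking updates `T_camera_patient` as the endoscope moves, and calibration supplies the intrinsics; an error in any link shifts the overlay on screen.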

Project Description

This project aims to provide AR guidance for robotic surgical removal of the prostate. The surgery is performed using the da Vinci robot, which is controlled by the surgeon from a master console. The console display is in stereo, providing 3D visualisation. Stereo AR overlays should give the impression of virtual structures sitting beneath the surface, as though the tissue were transparent.
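The depth impression comes from binocular disparity. A minimal sketch, assuming an ideal rectified stereo pair; the focal length, the 5 mm baseline and the depths are hypothetical round numbers rather than measured da Vinci parameters:

```python
"""Sketch: why a virtual structure rendered in stereo appears to lie beneath the surface.

Assumes an ideal rectified stereo pair (parallel cameras, horizontal baseline);
focal length, baseline and depths below are hypothetical, not da Vinci values.
"""


def stereo_project(f_px, baseline_mm, cx, point):
    """Return the x pixel coordinate of a camera-frame point in the left and right views.

    The left camera sits at the origin; the right camera is shifted by baseline_mm along +x.
    """
    x, _, z = point
    u_left = f_px * x / z + cx
    u_right = f_px * (x - baseline_mm) / z + cx
    return u_left, u_right


F_PX, BASELINE_MM, CX = 1000.0, 5.0, 320.0

surface = (2.0, 0.0, 50.0)  # a point on the visible tissue surface (mm)
hidden = (2.0, 0.0, 60.0)   # a virtual structure 10 mm further from the camera

for name, p in (("surface", surface), ("hidden", hidden)):
    u_l, u_r = stereo_project(F_PX, BASELINE_MM, CX, p)
    disparity = u_l - u_r  # equals f * B / Z for a rectified pair
    print(f"{name}: disparity = {disparity:.1f} px")
```

The deeper virtual point has the smaller disparity (about 83 px against 100 px), which is what lets the fused overlay appear to lie behind the tissue surface, provided each eye's overlay is rendered with that camera's calibrated parameters.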

There are a number of technical aspects to this work that could be examined during this project.

Calibration

The stereo da Vinci endoscope has certain properties that make its calibration difficult. There is significant lens distortion, and the baseline of the two images (the distance between the two cameras) is small. This makes calibration for stereo reconstruction difficult. Work at Imperial (see Danail Stoyanov's work on calibration) and elsewhere (see Christian Wengert's calibration work), amongst others, has examined the problem of endoscope calibration.
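Why a small baseline matters can be made concrete with a short derivation, assuming an ideal rectified stereo pair with focal length $f$ (in pixels), baseline $B$ and disparity $d$:

$$
Z = \frac{fB}{d}, \qquad
\left|\frac{\partial Z}{\partial d}\right| = \frac{fB}{d^{2}} = \frac{Z^{2}}{fB}
\quad\Longrightarrow\quad
\delta Z \approx \frac{Z^{2}}{fB}\,\delta d .
$$

For example, with hypothetical values $f = 1000$ px, $B = 5$ mm and $Z = 50$ mm, a disparity error of $\delta d = 0.5$ px gives $\delta Z \approx (2500/5000) \times 0.5 = 0.25$ mm; halving $B$ doubles this error, and uncorrected lens distortion feeds directly into $\delta d$.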

The purpose of this part of the project will be to examine the accuracy of different existing calibration techniques and to characterise the calibration parameters (including distortion) and how they vary with changing focus.
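One widely used parametric distortion model is the Brown-Conrady model with radial coefficients k1, k2, k3 and tangential coefficients p1, p2 (the form used by OpenCV). The sketch below assumes this model; whether it is adequate for the da Vinci endoscope is one of the things this part of the project would examine, and the coefficient values are hypothetical.

```python
"""Sketch: Brown-Conrady lens distortion (radial k1, k2, k3; tangential p1, p2).

The model is an assumption for illustration; coefficient values are hypothetical.
"""


def distort(x, y, k1, k2, k3, p1, p2):
    """Map ideal normalised image coordinates (x, y) to distorted ones."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def undistort(xd, yd, k1, k2, k3, p1, p2, iterations=20):
    """Invert distort() by fixed-point iteration (there is no closed form in general)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y


# Hypothetical coefficients giving strong barrel distortion (negative k1).
coeffs = (-0.35, 0.12, 0.0, 0.001, -0.0005)
xd, yd = distort(0.4, -0.3, *coeffs)
print("distorted:", xd, yd)
print("recovered:", undistort(xd, yd, *coeffs))
```

Characterising variation with focus could then amount to estimating the intrinsics and these coefficients separately at several focus settings and comparing the resulting parameter sets.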

This part of the project is related to the project on camera calibration but focuses on the specific issues of endoscope calibration and 3D stereo reconstruction under these conditions.
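Once both cameras are calibrated, each matched pixel defines a ray, and the 3D point can be triangulated. A minimal sketch using the midpoint method (one of several triangulation methods, chosen here for simplicity), with hypothetical camera positions and a hypothetical target point:

```python
"""Sketch: midpoint triangulation of a 3D point from a calibrated stereo pair.

Each calibrated camera turns a pixel into a ray (origin c, direction d); with noise
the two rays do not meet, so the midpoint of their closest approach is returned.
Camera positions and the target point are hypothetical.
"""


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b  # |d1|^2 |d2|^2 sin^2(angle); zero for parallel rays
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; depth is undetermined")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = add(c1, scale(d1, s))
    p2 = add(c2, scale(d2, t))
    return scale(add(p1, p2), 0.5)


# Left camera at the origin, right camera 5 mm along x (a small, endoscope-like baseline).
left_c, right_c = (0.0, 0.0, 0.0), (5.0, 0.0, 0.0)
target = (3.0, -2.0, 60.0)
ray_l = sub(target, left_c)
ray_r = add(sub(target, right_c), (0.0, 0.01, 0.0))  # perturbed, as if from a noisy match
print(triangulate_midpoint(left_c, ray_l, right_c, ray_r))
```

With a small baseline the two rays are nearly parallel, so `denom` becomes small and the result becomes sensitive to errors in the matched pixel positions.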

Patient Model Construction

To produce a model of the patient from preoperative imaging, the relevant organs need to be identified in the images. This can be a laborious process if done manually. A number of algorithms for automated or semi-automated segmentation of medical images are implemented as part of the Insight Toolkit (ITK). This part of the project will implement different techniques and compare their results. A fully automated method involving registration with a template from the Visible Human dataset will also be implemented. Various metrics will need to be used to assess the results, including surface point distance statistics and volume of overlap.
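Two of these metrics can be sketched on toy data: the Dice coefficient as one common measure of volume overlap, and mean and maximum (Hausdorff) closest-point distances between surfaces. Representing segmentations as sets of voxel indices and surfaces as short point lists is a simplifying assumption for illustration:

```python
"""Sketch: volume-overlap and surface-distance metrics on toy data.

Segmentations are sets of voxel indices and surfaces are lists of 3D points (mm);
both representations and all data are illustrative assumptions.
"""
import math


def dice(seg_a, seg_b):
    """Dice overlap 2|A & B| / (|A| + |B|); 1.0 means perfect agreement."""
    if not seg_a and not seg_b:
        return 1.0
    return 2.0 * len(seg_a & seg_b) / (len(seg_a) + len(seg_b))


def surface_distance_stats(surf_a, surf_b):
    """Mean and maximum (Hausdorff) symmetric closest-point distance between point sets.

    Brute force O(n*m); a k-d tree would be needed for realistic surface sizes.
    """
    def closest(points, others):
        return [min(math.dist(p, q) for q in others) for p in points]

    d = closest(surf_a, surf_b) + closest(surf_b, surf_a)
    return sum(d) / len(d), max(d)


# A 10x10x10 block and the same block shifted by one voxel along x.
manual = {(x, y, z) for x in range(10) for y in range(10) for z in range(10)}
automatic = {(x, y, z) for x in range(1, 11) for y in range(10) for z in range(10)}
print(f"Dice = {dice(manual, automatic):.3f}")

surf_manual = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
surf_auto = [(0.0, 0.0, 1.0), (10.0, 0.0, 1.0), (0.0, 10.0, 2.0)]
mean_d, max_d = surface_distance_stats(surf_manual, surf_auto)
print(f"mean = {mean_d:.2f} mm, max = {max_d:.2f} mm")
```

The one-voxel shift leaves 900 of 1000 voxels shared, so Dice is 0.9; the surface statistics report both the typical and the worst-case disagreement, which overlap measures alone can hide.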

Registration

The registration from the 3D preoperative model to the stereo video of the da Vinci robot can be achieved in a number of ways. If corresponding features can be identified in the calibrated left and right images, their positions in 3D can be reconstructed. These reconstructed points can then be aligned to the corresponding surface of the preoperative model. Alternatively, a rendering of the preoperative model can be matched to the two views using intensity-based metrics such as mutual information and photoconsistency. This area of work will involve implementation and evaluation of 2D-to-3D registration optimisation from a preoperative model to video images of the patient at various stages of the operation.
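For the feature-based route, the alignment step can be sketched with Horn's closed-form unit-quaternion method. This sketch assumes point correspondences between the reconstructed points and the model are already known; aligning to a whole surface without known correspondences would typically wrap this step in an iterative closest point (ICP) loop. The pose and points used for the check are hypothetical:

```python
"""Sketch: rigid alignment of reconstructed 3D points to the preoperative model.

Horn's closed-form unit-quaternion method with known point correspondences.
Test data are hypothetical; a surface-based registration would wrap this in ICP.
"""
import math


def jacobi_eigen(a, sweeps=50):
    """Eigen-decompose a small symmetric matrix with cyclic Jacobi rotations.

    Returns (eigenvalues, V); column k of V is the unit eigenvector for eigenvalue k.
    """
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < 1e-24:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-30:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A P (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- P^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # V <- V P accumulates the eigenvectors
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def horn_align(src, dst):
    """Least-squares rotation R and translation t such that dst_i ~ R src_i + t."""
    n = len(src)
    mean_s = [sum(p[i] for p in src) / n for i in range(3)]
    mean_d = [sum(p[i] for p in dst) / n for i in range(3)]
    # Cross-covariance of the centred point sets: S[i][j] = sum of src_i * dst_j.
    S = [[0.0] * 3 for _ in range(3)]
    for ps, pd in zip(src, dst):
        a = [ps[i] - mean_s[i] for i in range(3)]
        b = [pd[i] - mean_d[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                S[i][j] += a[i] * b[j]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # The eigenvector of N with the largest eigenvalue is the optimal unit quaternion.
    vals, vecs = jacobi_eigen(N)
    k = max(range(4), key=lambda i: vals[i])
    w, x, y, z = (vecs[r][k] for r in range(4))
    R = [
        [w * w + x * x - y * y - z * z, 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (y * x + w * z), w * w - x * x + y * y - z * z, 2 * (y * z - w * x)],
        [2 * (z * x - w * y), 2 * (z * y + w * x), w * w - x * x - y * y + z * z],
    ]
    t = [mean_d[i] - sum(R[i][j] * mean_s[j] for j in range(3)) for i in range(3)]
    return R, t


# Hypothetical check: move model points by a known pose, then recover that pose.
ang = math.radians(30.0)
R_true = [[math.cos(ang), -math.sin(ang), 0.0], [math.sin(ang), math.cos(ang), 0.0], [0.0, 0.0, 1.0]]
t_true = [4.0, -2.0, 55.0]
model_pts = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 8.0, 0.0), (0.0, 0.0, 6.0), (5.0, 5.0, 5.0)]
video_pts = [tuple(sum(R_true[i][j] * p[j] for j in range(3)) + t_true[i] for i in range(3)) for p in model_pts]
R, t = horn_align(model_pts, video_pts)
print([[round(e, 6) for e in row] for row in R], [round(e, 6) for e in t])
```

Intensity-based alternatives such as mutual information or photoconsistency have no closed form of this kind; they would instead drive an iterative optimisation over the six pose parameters.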

Visualisation

Visualisation is achieved by mixing real video with virtual images. The intention is that the stereo overlays will enable perception of structures sitting beneath the viewed surface as though the tissue were transparent (i.e. "x-ray eyes"). However, perception can be difficult because there are many visual depth cues other than stereo. There are a number of visual parameters that can be varied and tricks that can be employed, including varying the mixing level, overlaying visible edges and using moving textured rendering, with the aim of achieving correct depth perception. This project will look at these techniques and design experiments to test depth perception in the lab as well as in the operating theatre. This will involve interfacing with an external tracking device and implementing numerous graphical display techniques to perform these experiments and hopefully achieve accurate perception.
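The mixing level can be illustrated with simple alpha blending restricted to the pixels covered by the rendered model. This is a minimal sketch with tiny hypothetical images; the `blend` function and its mask convention are assumptions for illustration, and a real system would blend each eye's image on graphics hardware:

```python
"""Sketch: blending a rendered overlay into a video frame with an adjustable mixing level.

Images are tiny nested lists of (R, G, B) tuples purely for illustration; the
alpha value and colours are hypothetical.
"""


def blend(video, overlay, mask, alpha):
    """Return alpha*overlay + (1 - alpha)*video where mask is True, else the video pixel.

    alpha is the mixing level: 0 shows only video, 1 makes the virtual model opaque.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    out = []
    for v_row, o_row, m_row in zip(video, overlay, mask):
        out.append([
            tuple(round(alpha * o + (1.0 - alpha) * v) for v, o in zip(vp, op)) if m else vp
            for vp, op, m in zip(v_row, o_row, m_row)
        ])
    return out


video = [[(200, 120, 110), (190, 110, 100)]]  # tissue-coloured pixels from the endoscope
overlay = [[(0, 0, 255), (0, 0, 255)]]        # rendered (blue) model of a hidden structure
mask = [[True, False]]                        # the model covers only the first pixel
print(blend(video, overlay, mask, alpha=0.4))
```

Sweeping `alpha` in a depth-perception experiment would show how strongly the overlay competes with the surface texture, one of the non-stereo cues that can override the intended "beneath the surface" impression.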

The details of the project can be somewhat tailored to fit the skills and interests of the student, who should be a highly competent programmer and mathematician able to work as part of a team. One of the above areas should be selected.

Eddie Edwards

Last modified: Thu Oct 18 15:02:06 BST 2007