What are Neural Networks?

"A man-made simulation of a system of neurons."
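The simulated "neuron" in that definition can be sketched in a few lines. This is a minimal illustration that assumes the common weighted-sum-plus-sigmoid model of an artificial neuron. The names `neuron` and `sigmoid` and the example weights are hypothetical and do not come from the source.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One simulated neuron: weighted sum of inputs plus a bias, passed through a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


# Hypothetical weights chosen by hand; a real network would learn them from data.
print(neuron([1.0, 0.0], [2.0, -1.0], -1.0))  # sigmoid(1.0), roughly 0.731
```

A neural network connects many such units, with each unit's output feeding the inputs of others.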

Why Neural Networks?

"There exist problems that are too difficult to formulate into algorithms."

Some problems are too difficult to formulate as explicit algorithms, especially when they involve subtleties, so we need another method that can handle them and generalize from patterns in the data (2). In these cases we use a neural network, which is capable of “learning”. This lets us construct a solution that improves its performance over time, given a reasonable amount of data, and that produces consistent, predictable results for a given set of inputs. However, neural networks are not a perfect solution. A neural network can produce some degree of error (2) because of external influences and noise in the input data, although it generally reaches an acceptable solution to complicated problems.

Restricted Boltzmann Machines

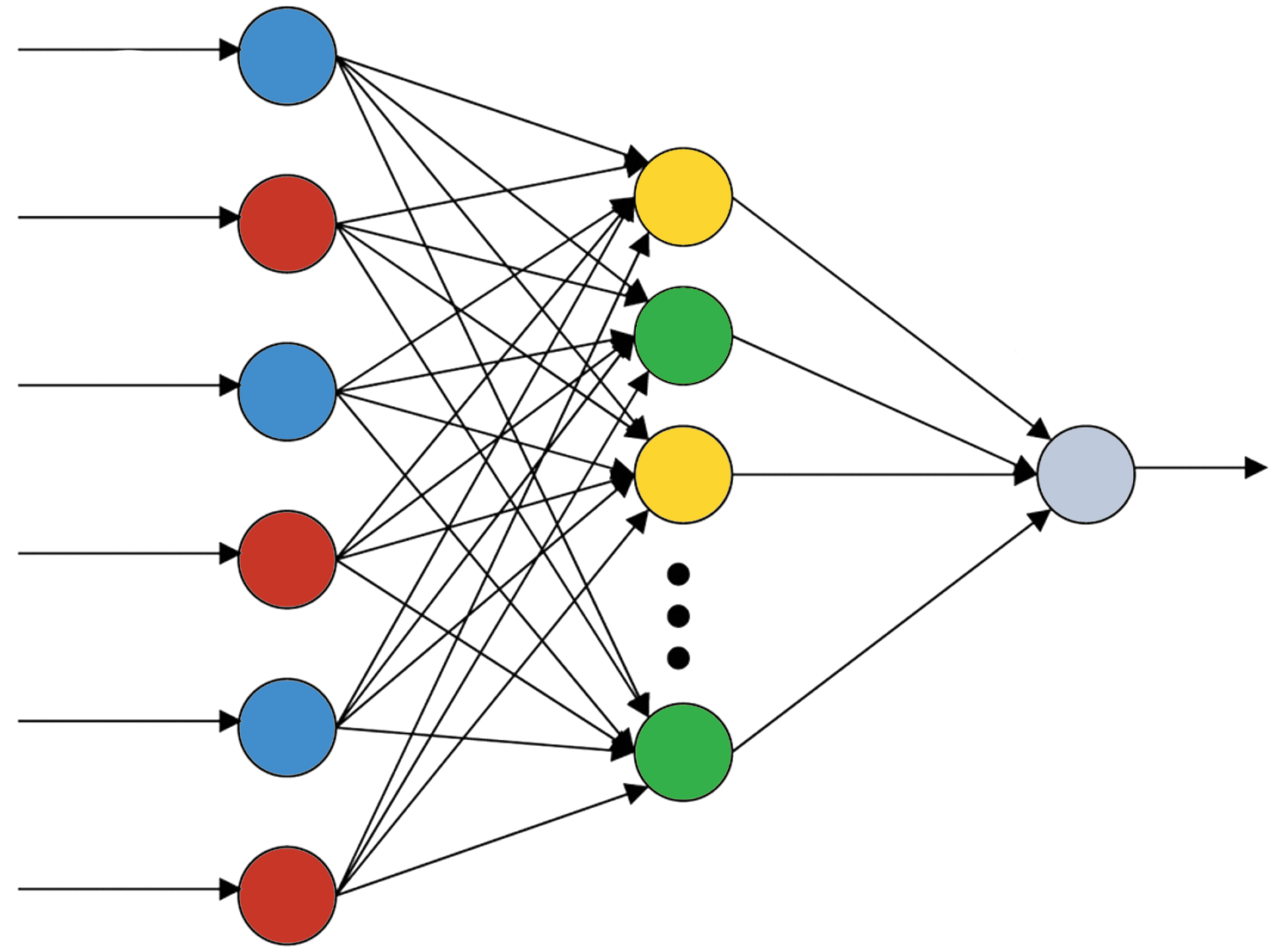

Restricted Boltzmann Machines (RBMs) are a type of neural network first proposed by Paul Smolensky in 1986 under the name “Harmonium” and later popularized by Geoff Hinton (3). RBMs are structurally simple, with two layers: the visible layer and the hidden layer. They are “symmetrically connected” networks of “neuron-like units” that make stochastic (randomly determined) decisions about whether to be on or off (4). In essence, an RBM is a complete bipartite graph (5): there are no connections between nodes in the same layer, and every node in the visible layer is connected to every node in the hidden layer.
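The structure described above can be sketched as a small plain-Python class. This is a minimal sketch that assumes binary units, sigmoid activations and the standard RBM energy function; the class `RBM` and its method names are hypothetical. Because there are no connections within a layer, each hidden unit's probability depends only on the visible layer (and vice versa), and the single weight matrix `W` is reused in both directions, which is what "symmetrically connected" means in practice.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic function, mapping any real input to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Binary RBM: a visible and a hidden layer, with no weights inside either layer."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0):
        self.rng = random.Random(seed)
        # W[i][j] is the one weight shared by visible unit i and hidden unit j;
        # it is used in both directions, which makes the connections symmetric.
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.b = [0.0] * n_hidden   # hidden biases

    def energy(self, v, h) -> float:
        """E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j (lower is more probable)."""
        e = -sum(ai * vi for ai, vi in zip(self.a, v))
        e -= sum(bj * hj for bj, hj in zip(self.b, h))
        e -= sum(v[i] * self.W[i][j] * h[j]
                 for i in range(len(v)) for j in range(len(h)))
        return e

    def p_hidden(self, v):
        """P(h_j = 1 | v) for each hidden unit; no hidden-to-hidden terms exist."""
        return [sigmoid(self.b[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b))]

    def p_visible(self, h):
        """P(v_i = 1 | h) for each visible unit, reusing W in the other direction."""
        return [sigmoid(self.a[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.a))]

    def sample(self, probs):
        """Stochastic on/off decision: unit k switches on with probability probs[k]."""
        return [1 if self.rng.random() < p else 0 for p in probs]


rbm = RBM(n_visible=6, n_hidden=3)
v = [1, 1, 0, 0, 1, 0]
h = rbm.sample(rbm.p_hidden(v))
print("hidden state:", h, "energy:", rbm.energy(v, h))
```

Computing a whole layer at once from the other layer is only possible because of the bipartite restriction; in an unrestricted Boltzmann machine, units in the same layer would influence each other.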

RBMs can be used to solve two “computationally different” problems: learning and searching (4). Both are analysed in depth in the following sections. RBMs are useful in fields such as “dimensionality reduction, classification, regression, collaborative filtering, feature learning and topic modeling” (3).
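The two problems can be contrasted in a short sketch. It assumes a binary RBM with sigmoid units; "searching" is illustrated as Gibbs sampling with the weights held fixed, and "learning" as one-step contrastive divergence (CD-1), a widely used training rule that the source does not name explicitly. All function names, the toy data and the hyperparameters (`lr`, `steps`, epoch count) are hypothetical choices for illustration.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the sketch is reproducible


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample(probs):
    """Stochastic binary decision for each unit."""
    return [1 if rng.random() < p else 0 for p in probs]


def p_hidden(v, W, b):
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]


def p_visible(h, W, a):
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]


def gibbs_search(v, W, a, b, steps=50):
    """Search: weights stay fixed; alternately resample the hidden and visible layers."""
    for _ in range(steps):
        h = sample(p_hidden(v, W, b))
        v = sample(p_visible(h, W, a))
    return v


def cd1_update(v0, W, a, b, lr=0.1):
    """Learning: one CD-1 step on a single training vector, updating W, a, b in place."""
    ph0 = p_hidden(v0, W, b)                    # positive phase, driven by the data
    v1 = sample(p_visible(sample(ph0), W, a))   # one-step reconstruction
    ph1 = p_hidden(v1, W, b)                    # negative phase, driven by the model
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        a[i] += lr * (v0[i] - v1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])


def reconstruction_error(data, W, a, b):
    """Mean squared error between each vector and its mean-field reconstruction."""
    total = 0.0
    for v in data:
        recon = p_visible(p_hidden(v, W, b), W, a)
        total += sum((vi - ri) ** 2 for vi, ri in zip(v, recon))
    return total / len(data)


# Toy data: two complementary 6-bit patterns (illustrative only).
data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 10
W = [[rng.gauss(0.0, 0.1) for _ in range(2)] for _ in range(6)]
a, b = [0.0] * 6, [0.0] * 2

before = reconstruction_error(data, W, a, b)
for _ in range(200):
    for v in data:
        cd1_update(v, W, a, b)
after = reconstruction_error(data, W, a, b)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
print("state found by search:", gibbs_search([1, 0, 1, 0, 0, 0], W, a, b))
```

The design point is that the same stochastic units serve both purposes: during search only the unit states change, while during learning the weights are nudged so that states resembling the training data become lower-energy and therefore more probable.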

Deep Belief Nets

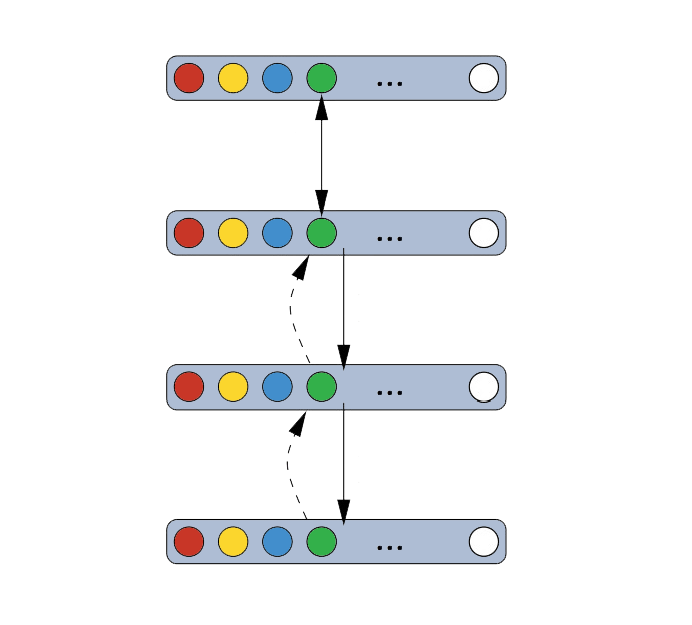

Deep Belief Nets (DBNs) are a logical extension of RBMs. A DBN is essentially a stack of RBMs in which “each layer communicates with both the previous and subsequent layers”, while units within the same layer are still not connected to each other. The top two layers of a DBN have “undirected, symmetric connections between them and form an associative memory”. Stacking several layers lets a DBN learn deeper, more abstract representations of the data than a single bipartite RBM can, which is where the “deep” in its name comes from (6, 7).
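A common way to build such a stack is greedy, layer-by-layer training: train one RBM on the data, then treat its hidden-unit activities as the "visible" data for the next RBM. The sketch below assumes that procedure and shows only this pretraining stage; it treats every layer as an RBM and omits the directed top-down connections of the lower layers and any later fine-tuning. The function names, layer sizes and toy data are hypothetical.

```python
import math
import random

rng = random.Random(1)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def up(v, W, b):
    """Hidden-unit probabilities given a visible vector."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(b))]


def down(h, W, a):
    """Visible-unit probabilities given a hidden vector (same weights, other direction)."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(a))]


def train_rbm(data, n_hidden, epochs=100, lr=0.1):
    """Train one RBM with CD-1 (mean-field reconstruction) and return (W, a, b)."""
    n_visible = len(data[0])
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
    a, b = [0.0] * n_visible, [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            ph0 = up(v0, W, b)
            h0 = [1 if rng.random() < p else 0 for p in ph0]  # stochastic hidden states
            v1 = down(h0, W, a)
            ph1 = up(v1, W, b)
            for i in range(n_visible):
                for j in range(n_hidden):
                    W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
                a[i] += lr * (v0[i] - v1[i])
            for j in range(n_hidden):
                b[j] += lr * (ph0[j] - ph1[j])
    return W, a, b


def train_dbn(data, layer_sizes, epochs=100):
    """Greedy stacking: each new RBM is trained on the hidden activities of the one below."""
    layers, x = [], data
    for n_hidden in layer_sizes:
        W, a, b = train_rbm(x, n_hidden, epochs)
        layers.append((W, a, b))
        x = [up(v, W, b) for v in x]  # becomes the "visible" data for the next layer
    return layers


def encode(v, layers):
    """Pass a visible vector up through every layer of the stack."""
    for W, _, b in layers:
        v = up(v, W, b)
    return v


data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 5
dbn = train_dbn(data, layer_sizes=[4, 2])
code_a = encode([1, 1, 1, 0, 0, 0], dbn)
code_b = encode([0, 0, 0, 1, 1, 1], dbn)
print([round(p, 2) for p in code_a], [round(p, 2) for p in code_b])
```

Each layer only ever sees the layer directly below it, which mirrors the description above: no intra-layer connections, and communication only between neighbouring layers.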

How Does It All Work?

Now that we know what these models are, let us look at how they work in detail.