Benchmarks for Event-driven SJ Sessions

Section 5 of the paper presented preliminary performance results comparing a simple multithreaded SJ implementation of an SMTP server against an event-driven version implemented using the new SJSelector API. This online appendix presents the extended micro and macro benchmarks, together with the full details omitted from the paper, using results obtained with the latest version of SJ. The full micro and macro benchmark source code and scripts, and the raw results from each benchmark, can be found below.

(See here for benchmarks that compare SJ performance against the standard java.net.* Socket API and Java RMI.)

Benchmark Environment

To simulate large numbers of concurrently active clients, all of the benchmarks were executed in the following cluster environment. Each node is a Sun Fire x4100 with two 64-bit Opteron 275 processors at 2 GHz and 8 GB of RAM, running 64-bit Mandrakelinux 10.2 (kernel 2.6.11); the nodes are connected via gigabit Ethernet. The average latency between nodes was measured at 0.5 ms (64-byte ping). The benchmark applications were compiled and executed using the Sun Java SE compiler and runtime, version 1.6.0. Each experiment features a single Server and, where needed, a single Timer Client, each running alone on its own node (Servers are bound to a single core); the Load Clients are distributed evenly across the remaining nodes of the cluster. For each parameter configuration in each experiment, a fixed number of warm-up runs was discarded before results were recorded; the results below are mean values over the recorded runs.
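The warm-up-then-measure procedure described above can be sketched as follows. This is a minimal Python illustration, not the actual benchmark harness: the function name `run_benchmark` and its `warmup_runs`/`measured_runs` parameters are hypothetical, and the number of warm-up runs used in the real experiments is not stated here.

```python
import statistics
import time
from typing import Callable, List


def run_benchmark(task: Callable[[], object], warmup_runs: int, measured_runs: int) -> List[float]:
    """Run `task` repeatedly and return the measured durations in milliseconds.

    The first `warmup_runs` executions are performed but not timed, so that
    one-off start-up costs (class loading and JIT compilation in the JVM case,
    cache warming) do not skew the recorded results.
    """
    for _ in range(warmup_runs):
        task()  # warm-up run: timing deliberately discarded

    durations_ms: List[float] = []
    for _ in range(measured_runs):
        start = time.perf_counter_ns()
        task()
        durations_ms.append((time.perf_counter_ns() - start) / 1e6)
    return durations_ms


if __name__ == "__main__":
    # Hypothetical workload standing in for one benchmark run.
    samples = run_benchmark(lambda: sum(range(10_000)), warmup_runs=5, measured_runs=100)
    print(f"mean = {statistics.mean(samples):.4f} ms over {len(samples)} runs")
```

Only the recorded runs contribute to the reported mean; the warm-up runs still execute the full workload.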

We give the complete results for the following micro and macro benchmarks.

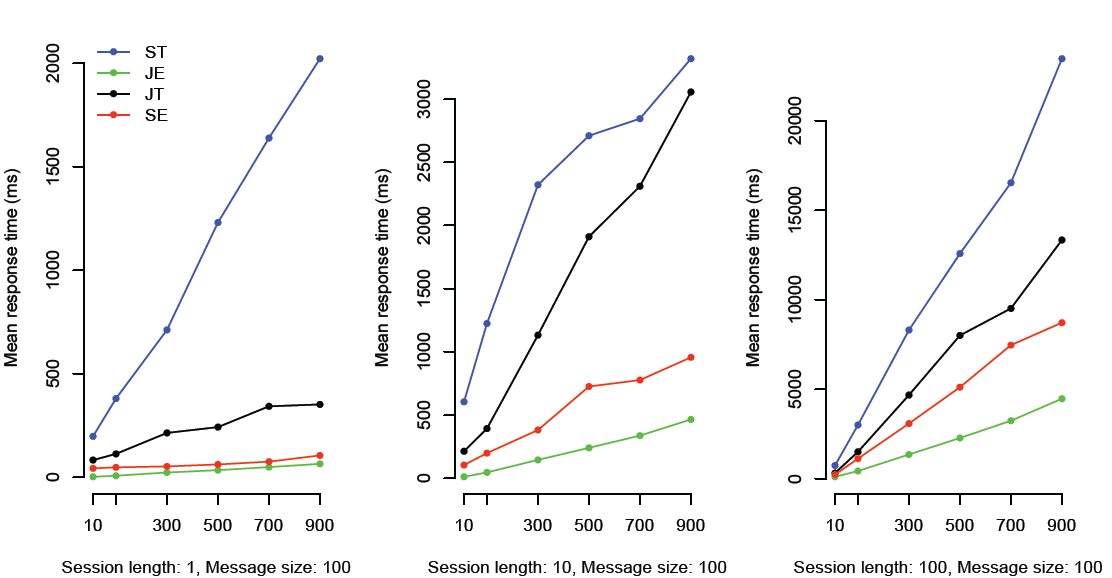

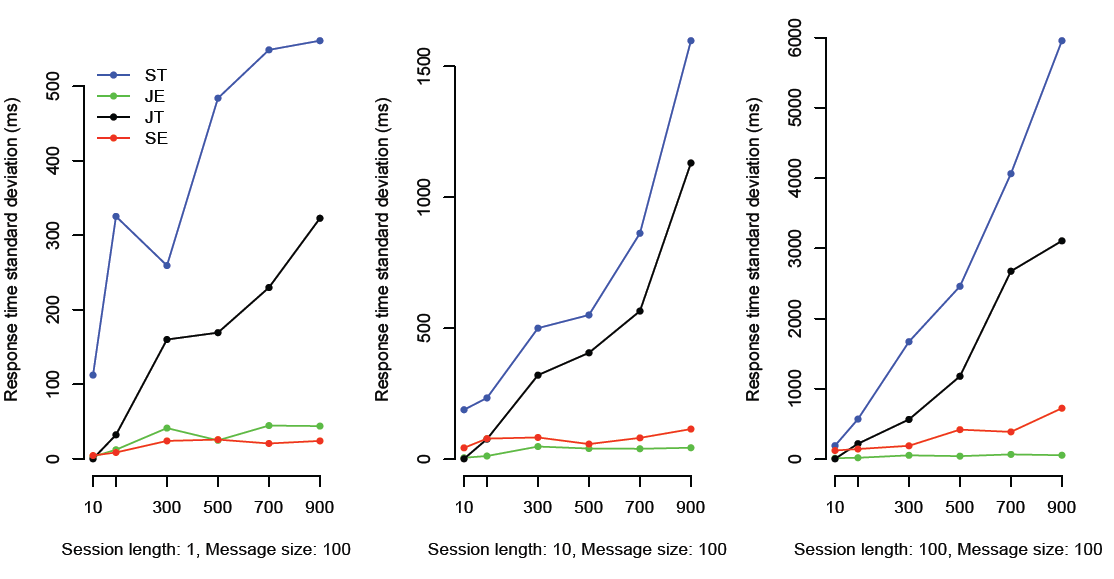

Micro Benchmark 1. Server Response Time: this micro benchmark measures the time taken to complete a simple Client session while the Server is under load from varying numbers of other concurrent Clients.

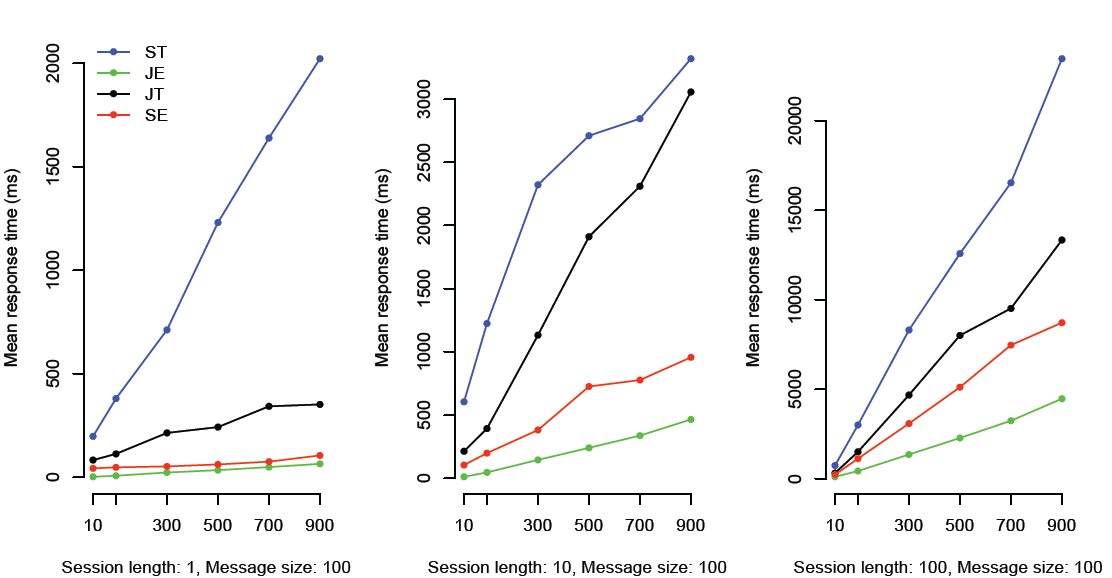

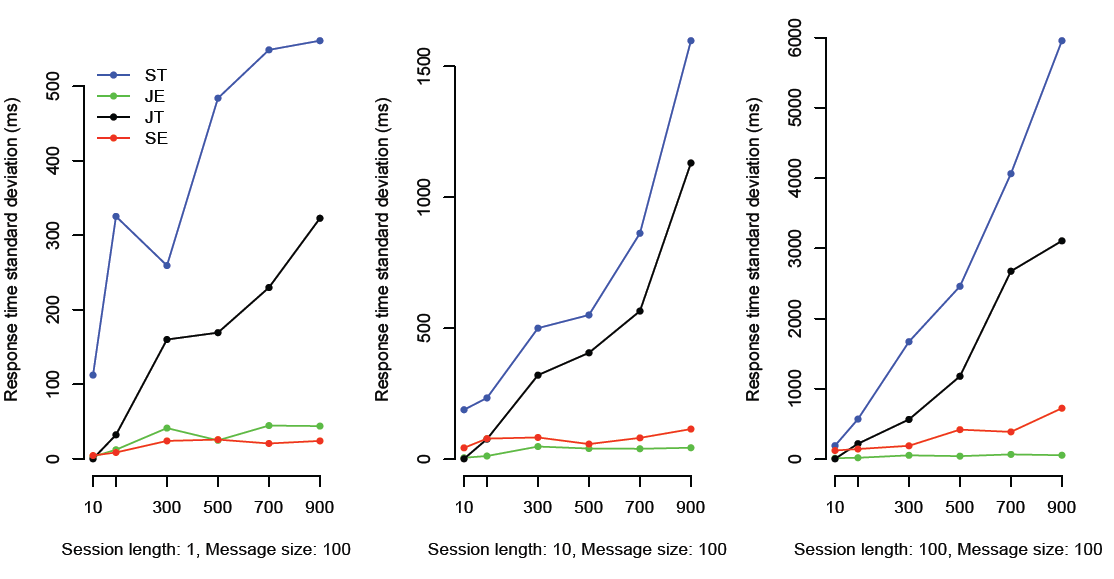

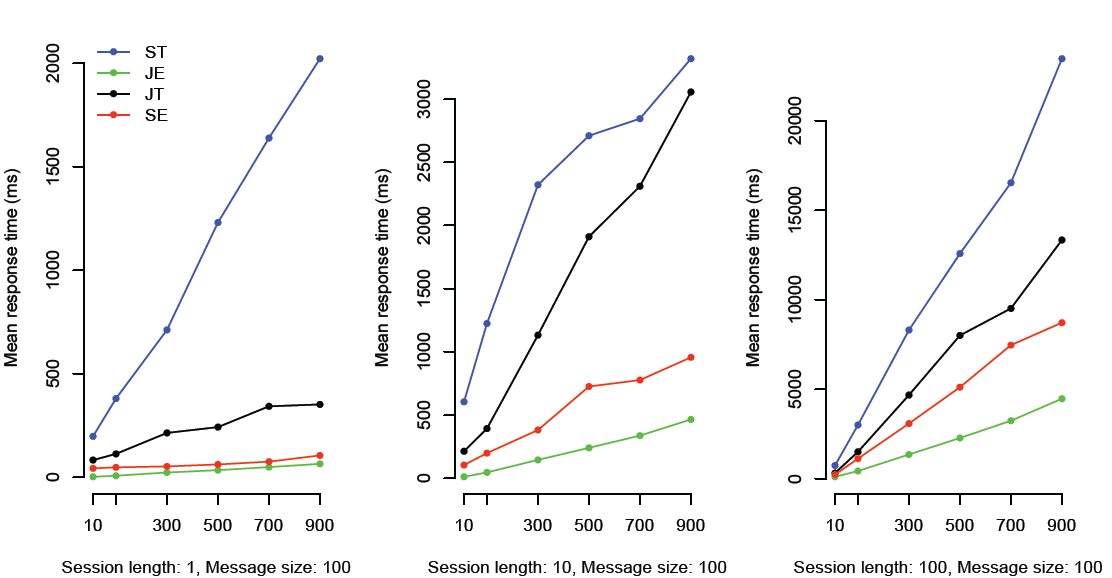

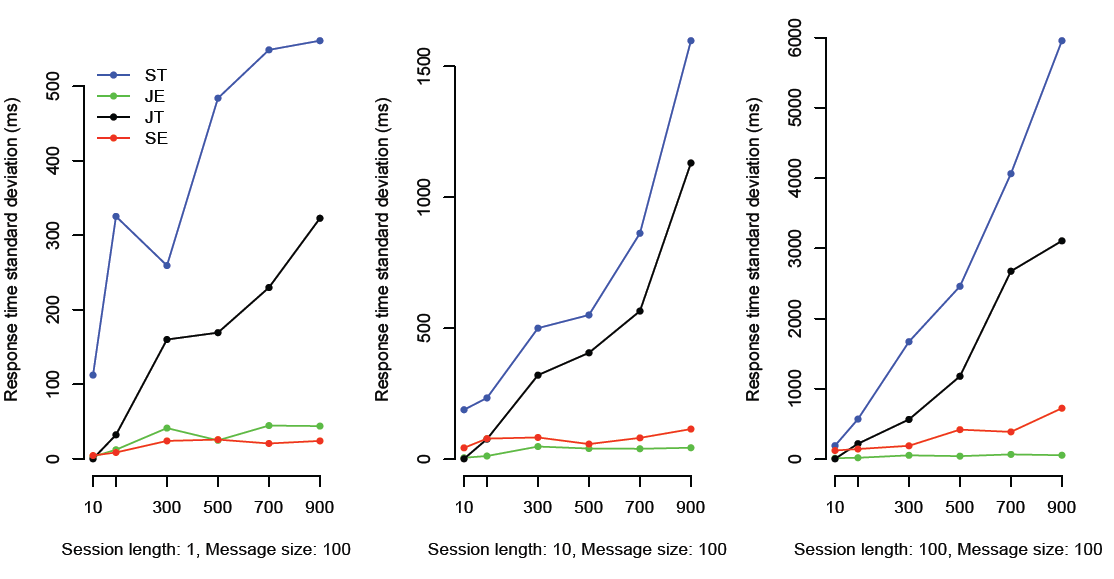

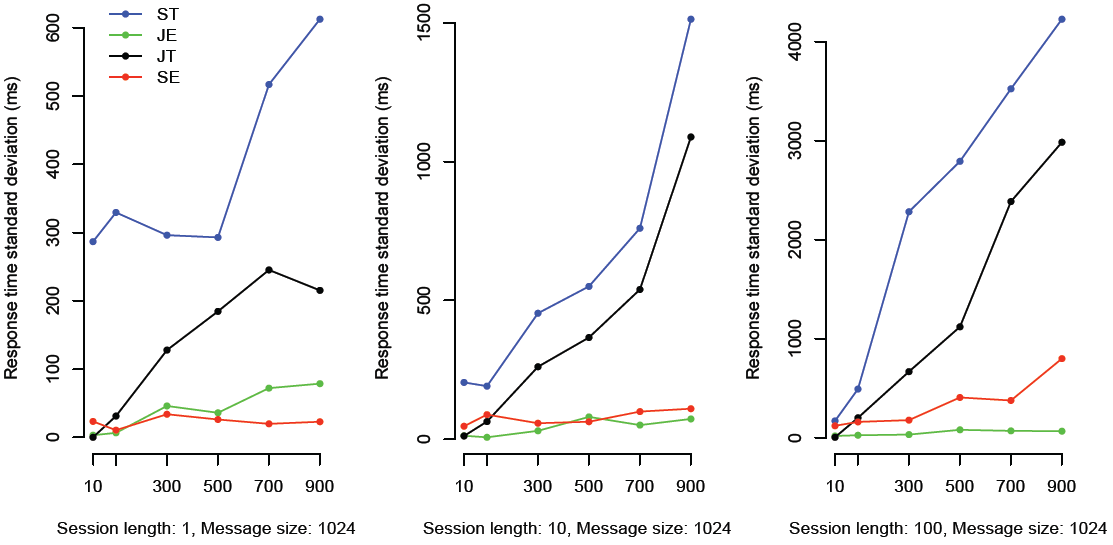

1-1. Mean response time and response time variance for message size 100 Bytes.

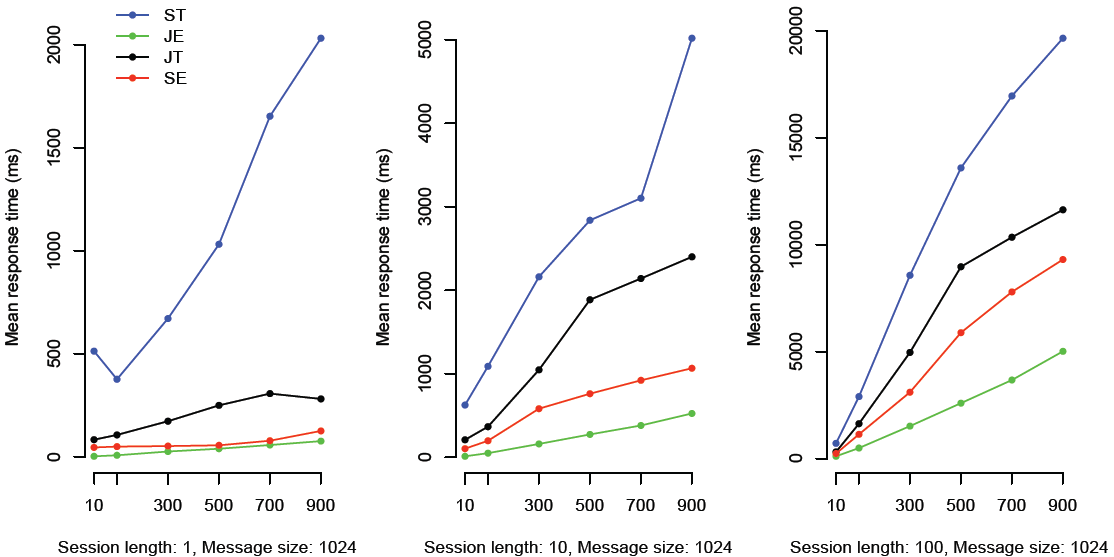

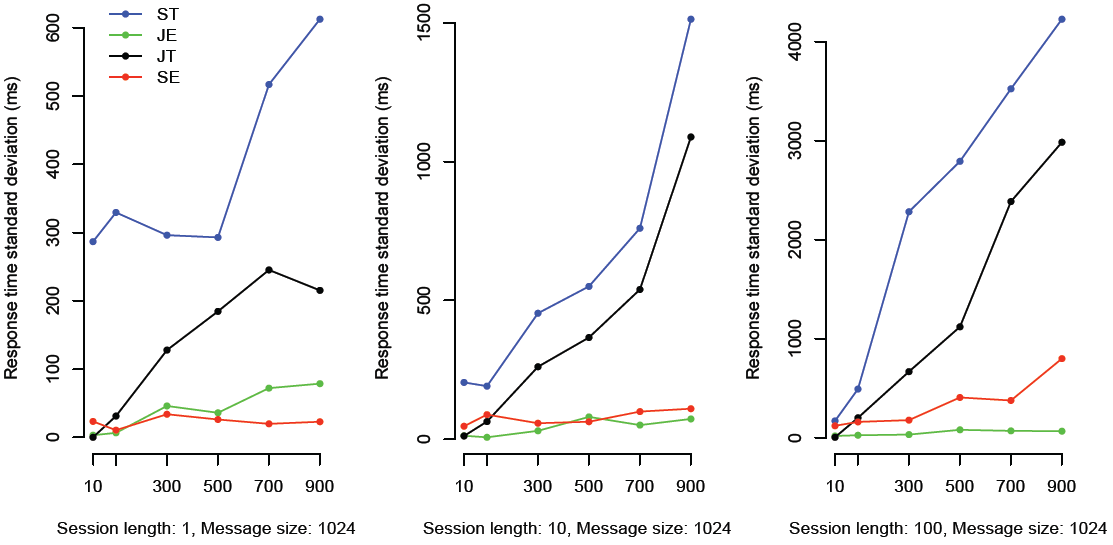

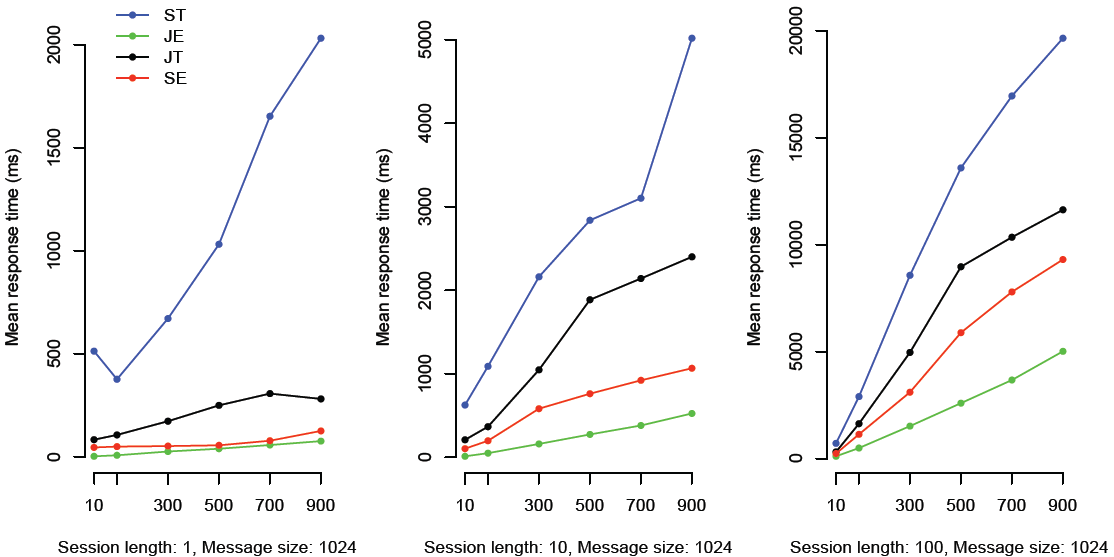

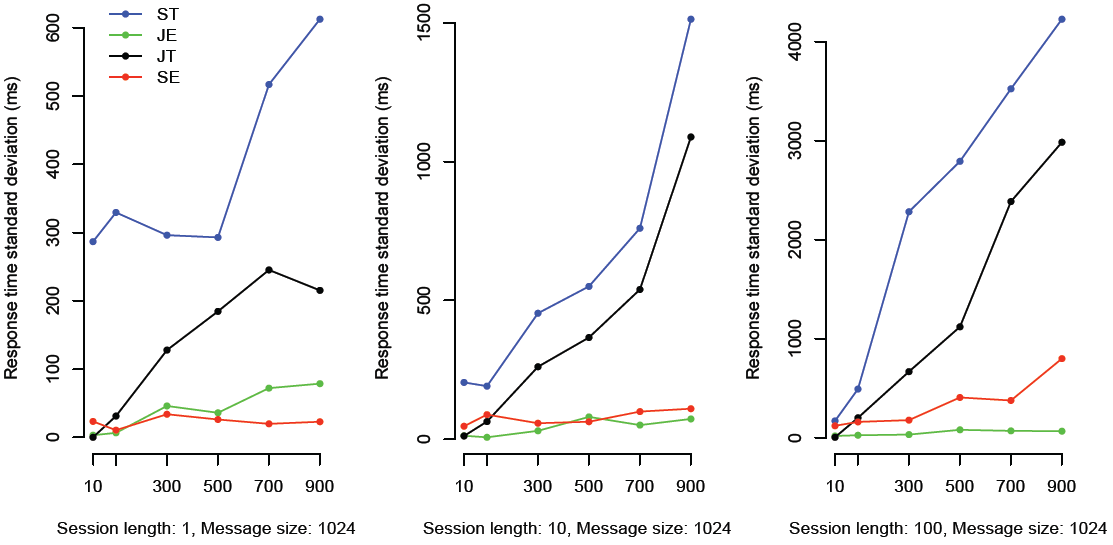

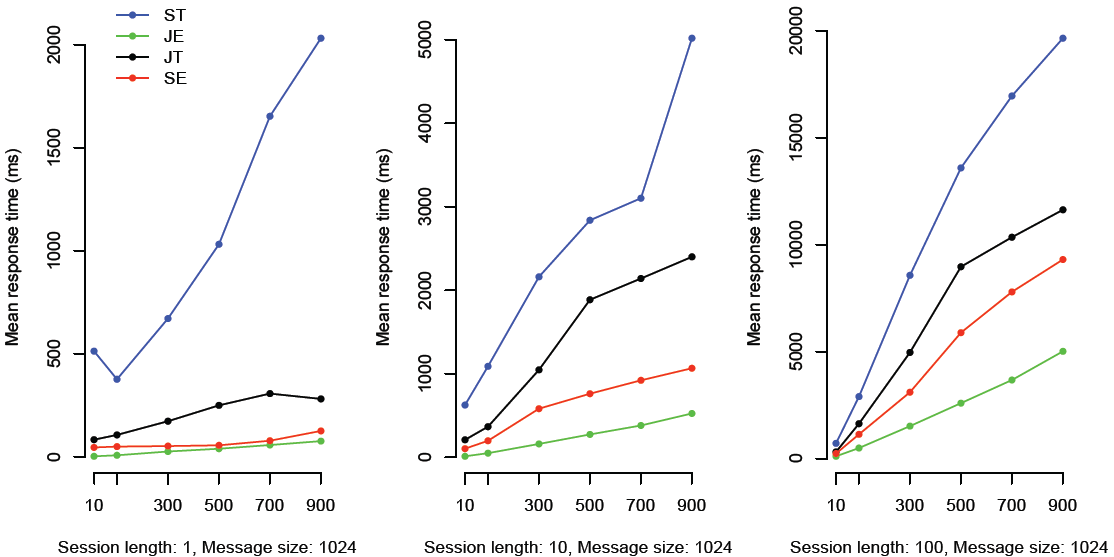

1-2. Mean response time and response time variance for message size 1 KB.

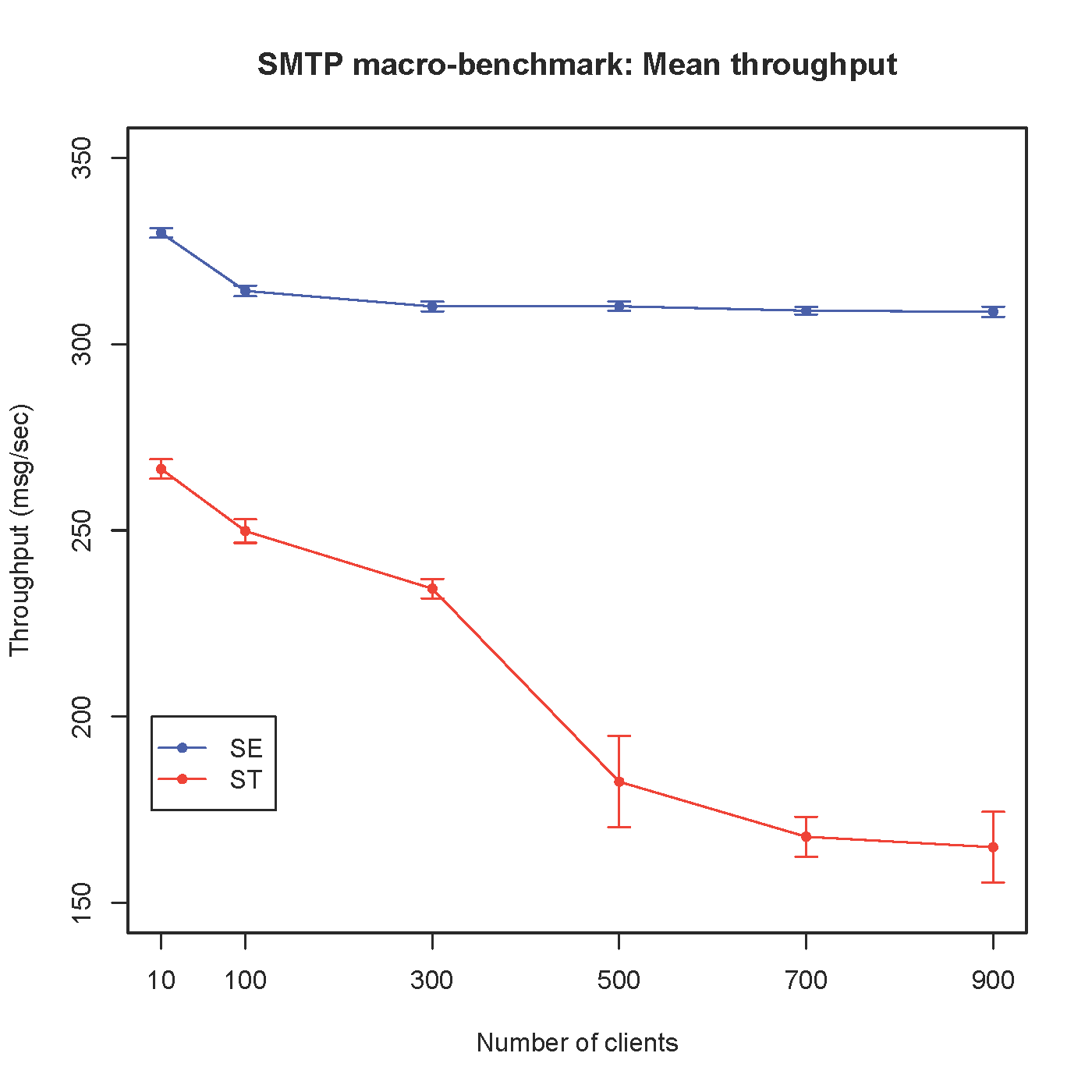

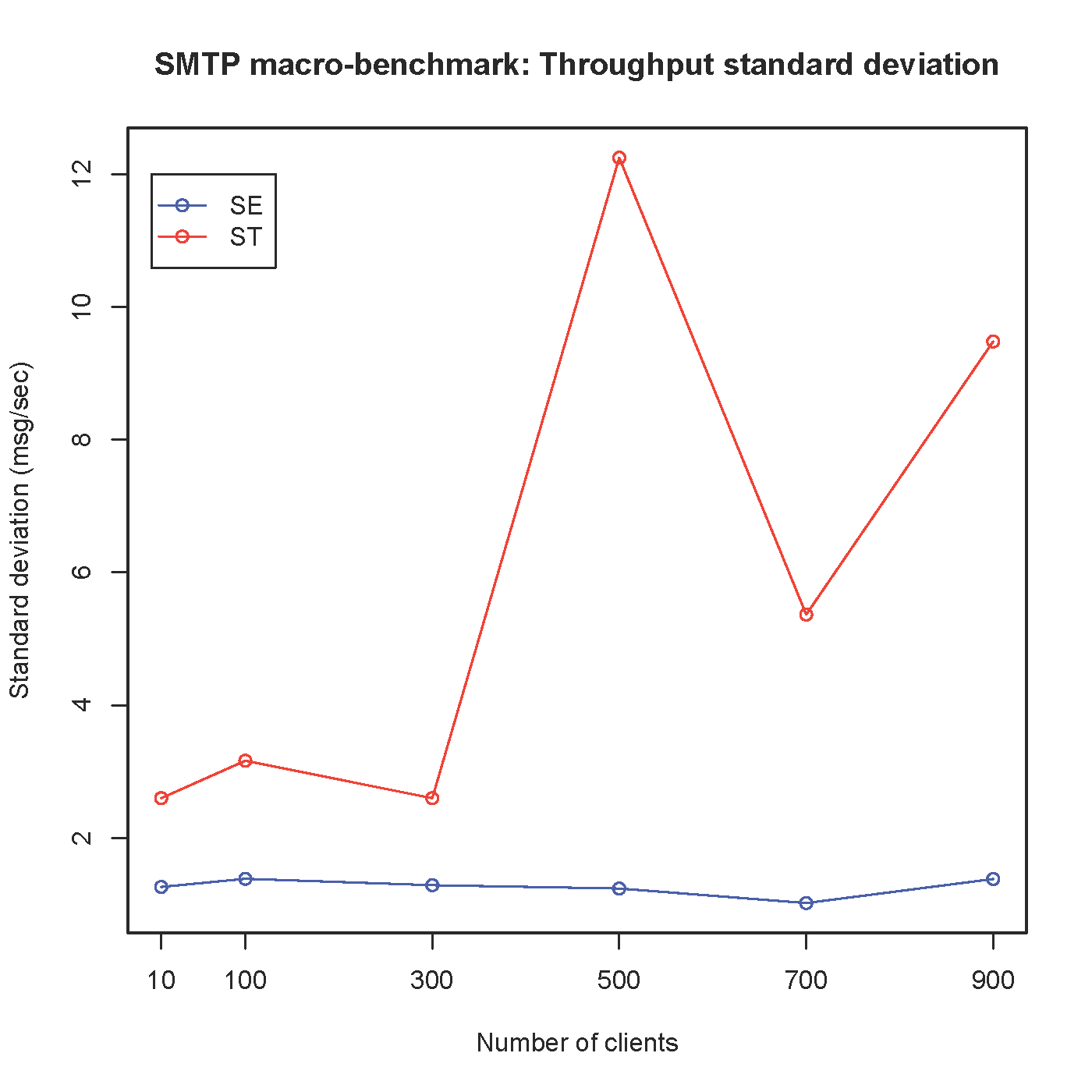

Macro Benchmark 1. SMTP Throughput: this macro benchmark measures the number of e-mail messages successfully processed by the SMTP Server while it is under load from varying numbers of concurrent Clients.

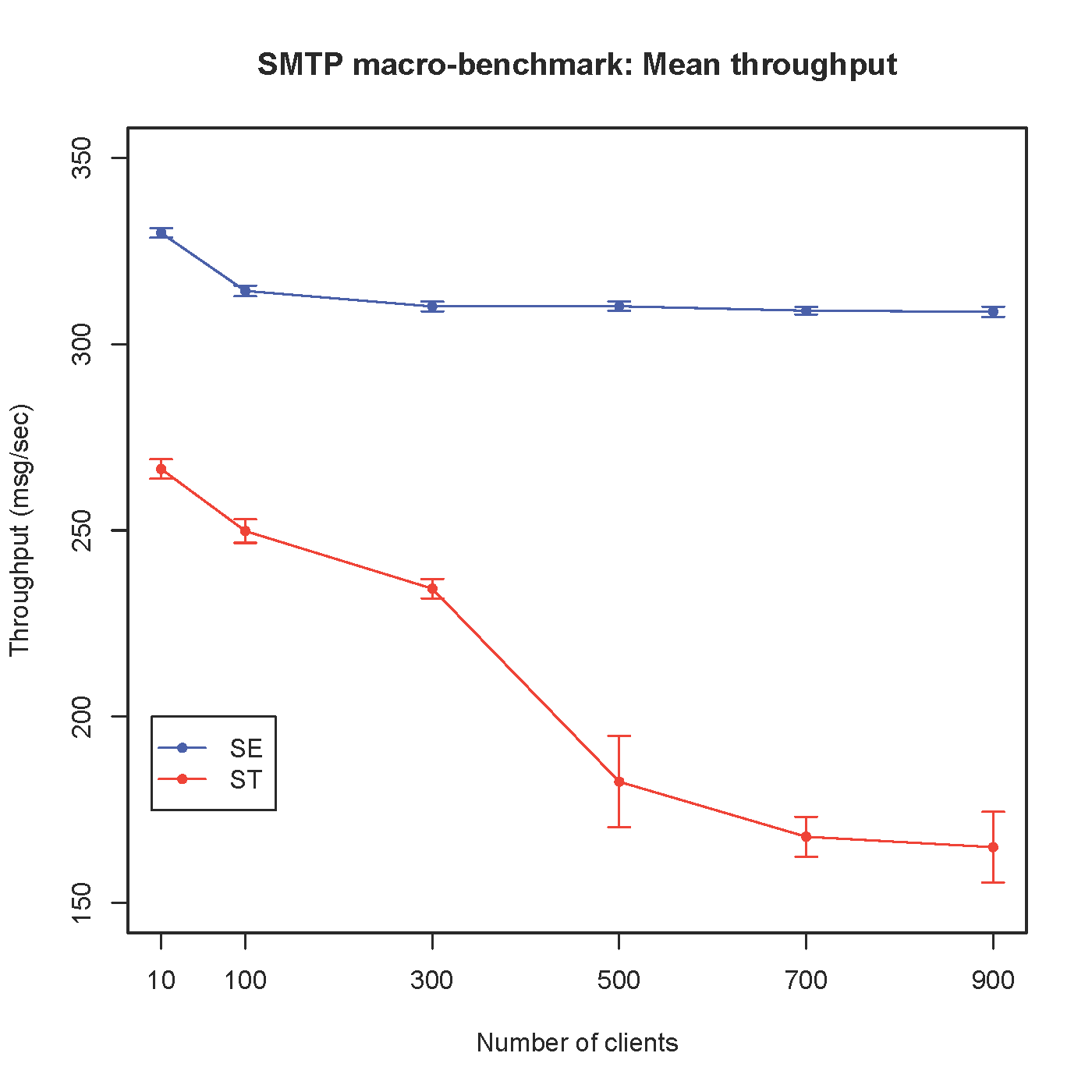

1-1. Mean throughput and standard deviation.

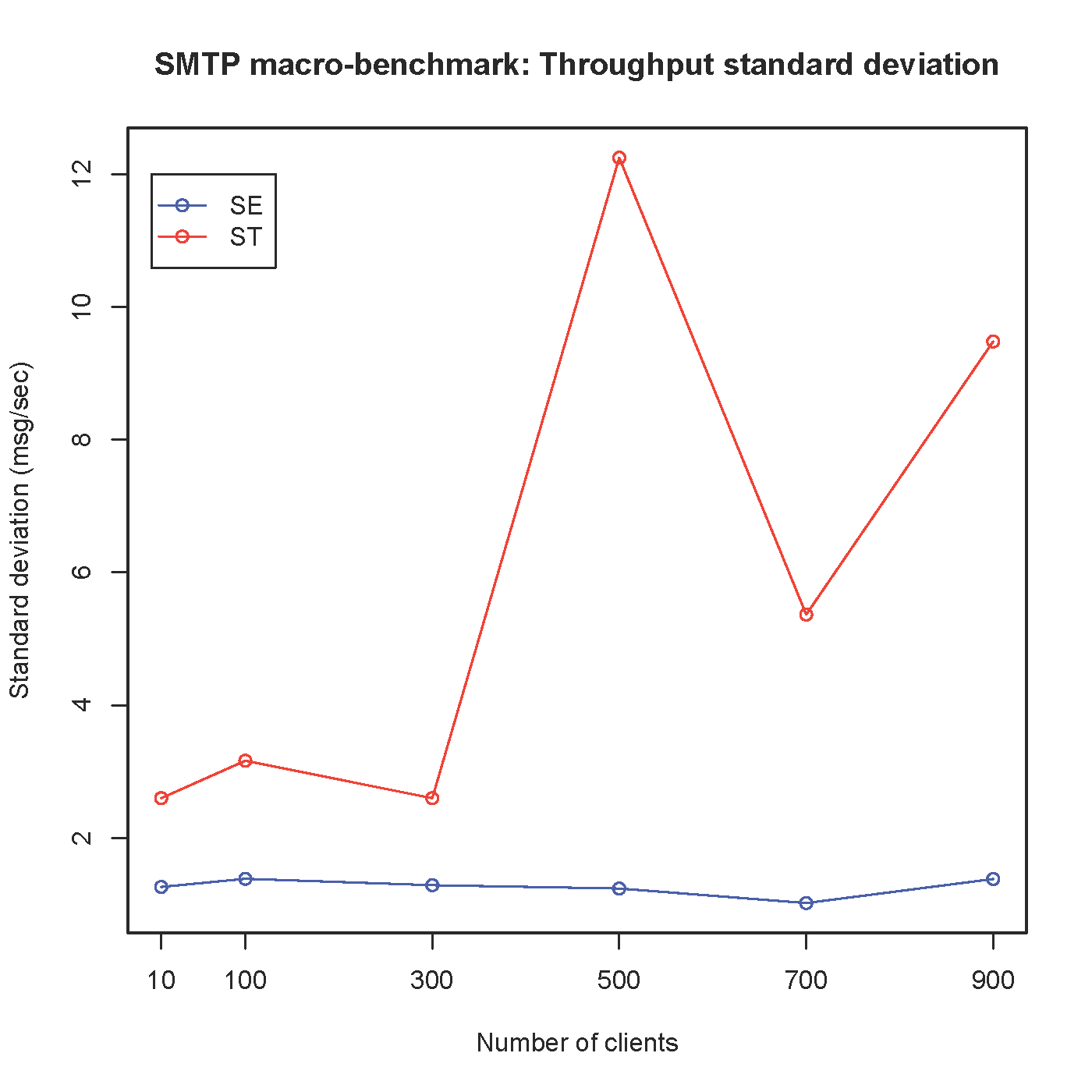

1-2. Throughput variance.

The following abbreviations are used for each benchmark application version:

- JT: Java multithreaded implementation.
- JE: Java event-driven implementation.
- ST: SJ multithreaded implementation.
- SE: SJ event-driven implementation.

In Micro Benchmark 1, all Load Clients engage in non-terminating (i.e. repeatedly looping) microbenchmark sessions with the Server, sending fixed messages of size (a) 100 Bytes and (b) 1 KB. The time taken to complete a session with the Server is measured by a Timer Client while the Server is under load from 10, 100, 300, 500, 700 and 900 Load Clients. For each Server instance, 100 measurements (i.e. 100 Timer Client sessions) were taken, and the entire experiment was repeated 10 times.
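The size of this experiment follows directly from the parameters above. The sketch below enumerates the configuration grid, assuming every version (JT, JE, ST, SE) was run at every load level and message size, and that 1 KB is encoded as `size=1024` in the raw data (an assumption; only `size=100` appears in the example header). The names `configurations` and `SESSIONS_PER_RUN` are hypothetical.

```python
from itertools import product

# Parameter values taken from the Micro Benchmark 1 description.
VERSIONS = ["JT", "JE", "ST", "SE"]
LOAD_CLIENTS = [10, 100, 300, 500, 700, 900]
MESSAGE_SIZES = [100, 1024]  # bytes; encoding 1 KB as 1024 is an assumption
TRIALS = range(10)           # experiment repeated 10 times (trial=0..9)
SESSIONS_PER_RUN = 100       # Timer Client sessions per Server instance


def configurations():
    """Yield one dict per Server instance (i.e. per experiment run)."""
    for version, clients, size, trial in product(VERSIONS, LOAD_CLIENTS, MESSAGE_SIZES, TRIALS):
        yield {"version": version, "clients": clients, "size": size, "trial": trial}


if __name__ == "__main__":
    runs = list(configurations())
    # prints: 480 runs, 48000 timed sessions
    print(len(runs), "runs,", len(runs) * SESSIONS_PER_RUN, "timed sessions")
```

Under these assumptions the benchmark comprises 4 × 6 × 2 × 10 = 480 Server instances and 48,000 timed sessions in total.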

The raw results from this benchmark are given below.

Micro 1-1. Mean response time and response time standard deviation (millis) of the JT vs. JE vs. ST vs. SE microbenchmark Servers for message size 100 Bytes.

Micro 1-2. Mean response time and response time standard deviation (millis) of the JT vs. JE vs. ST vs. SE microbenchmark Servers for message size 1 KB.

The complete results from Micro Benchmark 1 can be downloaded here (zip). The archive includes the raw data output (.txt) by the benchmark applications, an awk script for parsing the raw results, and the parsed results (.csv).

Format of the raw data. Each data file starts by listing the global benchmark configuration parameters. Each set of results for a parameter configuration is then headed by a line such as

Parameters: version=JE, clients=10, size=100, length=1, trial=0

where version specifies the running application, clients the number of Load Clients connected to the Server, size the size of the messages communicated between the Server and Clients, and trial the experiment repetition number (0 to 9). A single set of results, corresponding to one experiment run, spans 3 × 100 lines of data: each group of three lines gives the time (nanos) to complete session initiation, the main session body, and session close for one of the 100 Timer Client sessions.
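The awk script in the archive is the reference parser; the following is an independent Python sketch of the same format. It assumes that each timing line holds a single integer nanosecond value, that a session's response time is the sum of its three phases, and that statistics are pooled over all trials of a configuration. The function names are hypothetical, and the length parameter, whose meaning is not described here, is ignored.

```python
import re
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

HEADER = re.compile(r"^Parameters:\s*(.*)$")
Key = Tuple[str, int, int]  # (version, clients, size)


def parse_micro(lines: Iterable[str]) -> Dict[Key, List[float]]:
    """Map (version, clients, size) to session times in ms, pooled over all trials."""
    results: Dict[Key, List[float]] = defaultdict(list)
    key = None
    phases: List[int] = []  # nanos for the current session: initiation, body, close
    for raw in lines:
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            # e.g. "version=JE, clients=10, size=100, length=1, trial=0"
            params = dict(re.findall(r"(\w+)=(\w+)", header.group(1)))
            key = (params["version"], int(params["clients"]), int(params["size"]))
            phases = []
            continue
        if key is None or not line.isdigit():
            continue  # global configuration lines, blank lines, etc.
        phases.append(int(line))
        if len(phases) == 3:
            # Assumed: response time = initiation + body + close.
            results[key].append(sum(phases) / 1e6)
            phases = []
    return results


def summarise(results: Dict[Key, List[float]]) -> Dict[Key, Tuple[float, float]]:
    """Return (mean_ms, standard_deviation_ms) for each configuration."""
    return {k: (statistics.mean(v), statistics.stdev(v)) for k, v in results.items() if len(v) > 1}
```

For example, `summarise(parse_micro(open("micro.txt")))` would give the mean and standard deviation per configuration, where `micro.txt` stands for one of the raw .txt files.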

In Macro Benchmark 1, all Load Clients engage in non-terminating (i.e. repeatedly looping) SMTP sessions with the Server, sending fixed messages of size 1 KB; no Timer Clients are involved. The throughput at the Server was measured over a series of 100 windows, each 15 millis long, under load from 10, 100, 300, 500, 700 and 900 Load Clients. The entire experiment was repeated 10 times.
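Window-based throughput measurement can be illustrated with the sketch below, which counts completed messages in consecutive fixed-length windows and converts each count to messages per second. It is a hypothetical reconstruction, not the SJ Server's code: it works offline on a list of completion timestamps, and the names `window_counts` and `to_throughput` are illustrative. The raw files record each window's length, which suggests the measured length may differ slightly from the nominal 15 ms; this sketch simply uses the nominal value.

```python
from bisect import bisect_left
from typing import List, Tuple


def window_counts(completion_ns: List[int], start_ns: int, window_ns: int,
                  windows: int) -> List[Tuple[int, int]]:
    """Count completed messages in consecutive fixed-length windows.

    Returns (window_length_ns, messages_in_window) pairs, mirroring the
    pairs of lines recorded in the raw data files.
    """
    times = sorted(completion_ns)
    pairs = []
    for i in range(windows):
        lo = start_ns + i * window_ns
        hi = lo + window_ns
        # Number of messages completed in the half-open interval [lo, hi).
        count = bisect_left(times, hi) - bisect_left(times, lo)
        pairs.append((window_ns, count))
    return pairs


def to_throughput(pairs: List[Tuple[int, int]]) -> List[float]:
    """Convert (window_ns, count) pairs into messages per second."""
    return [count * 1e9 / window_ns for window_ns, count in pairs]
```

With 15 ms windows, a window in which 3 messages complete corresponds to 3 / 0.015 = 200 messages per second.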

The raw results from this benchmark are given below.

Macro 1-1. Mean throughput and standard deviation (messages handled per second) of the multithreaded SJ SMTP Server compared with the event-driven SJ SMTP Server.

Macro 1-2. Standard deviation in the throughput performance (messages handled per second) of the multithreaded and event-driven SJ SMTP Servers.

The complete results from Macro Benchmark 1 can be downloaded here (tar.gz). The archive includes the raw data output (.txt) by the benchmark applications, an awk script for parsing the raw results, and the parsed results (.csv).

Format of the raw data. Each data file starts by listing the global benchmark configuration parameters. Each set of results for a parameter configuration is then headed by a line such as

Parameters: version=SE, size=1024, trial=0

where version specifies the running application, size the size of the e-mail messages submitted to the Server, and trial the experiment repetition number (0 to 9). A single set of results, corresponding to one experiment run, spans 2 × 100 lines of data: each pair of lines gives the length of a throughput window (nanos) followed by the number of messages handled by the Server in that window.
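As with Micro Benchmark 1, the awk script in the archive is the reference parser. The following independent Python sketch reads this format and converts each (window length, message count) pair into a throughput in messages per second. It assumes one integer per data line and pools the windows of all trials per configuration; the function names are hypothetical.

```python
import re
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

HEADER = re.compile(r"^Parameters:\s*(.*)$")
Key = Tuple[str, int]  # (version, size)


def parse_macro(lines: Iterable[str]) -> Dict[Key, List[float]]:
    """Map (version, size) to per-window throughputs in messages/s, pooled over trials."""
    results: Dict[Key, List[float]] = defaultdict(list)
    key = None
    window_ns = None  # first line of the current pair, once read
    for raw in lines:
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            # e.g. "version=SE, size=1024, trial=0"
            params = dict(re.findall(r"(\w+)=(\w+)", header.group(1)))
            key = (params["version"], int(params["size"]))
            window_ns = None
            continue
        if key is None or not line.isdigit():
            continue  # global configuration lines, blank lines, etc.
        if window_ns is None:
            window_ns = int(line)  # first line of the pair: window length (nanos)
        else:
            messages = int(line)   # second line: messages handled in that window
            if window_ns > 0:
                results[key].append(messages * 1e9 / window_ns)
            window_ns = None
    return results


def summarise(results: Dict[Key, List[float]]) -> Dict[Key, Tuple[float, float]]:
    """Return (mean, standard deviation) of throughput for each configuration."""
    return {k: (statistics.mean(v), statistics.stdev(v)) for k, v in results.items() if len(v) > 1}
```

Normalising by the recorded window length, rather than the nominal 15 ms, keeps the per-second figures comparable even if individual windows ran slightly long or short.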

Benchmark Source Code

For the complete benchmark source code and execution scripts:

The benchmark scripts are written in Python. The macro benchmarks presented in this online appendix use the same SJ SMTP server implementations as those described in the paper.
