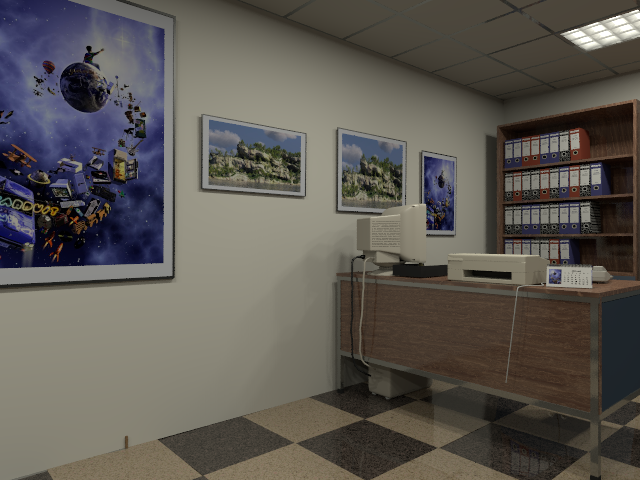

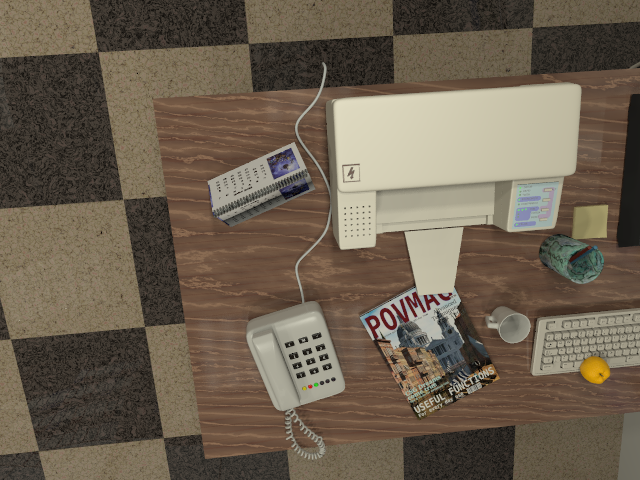

The download section comprises two sub-sections: one contains perfect ray-traced images rendered by POVRay, and the other contains photo-realistic images with motion blur and camera noise added using parameters obtained from our Basler camera. The perfect ray-traced images, being free of camera artifacts, can also be useful beyond pure 6DoF camera tracking, e.g. for dense 3D reconstruction. In the following, all perfect ray-traced images are 16-bit and stored in PNG format. All frame-rates are interpolated on a 5-second-long trajectory. Please note that although this website currently contains only one trajectory, we will add a set of fresh trajectories to this dataset to ensure that a wide range of dynamics is covered. We will also soon add images from different scenes. We rendered these images on a cluster of machines in our department using Condor, a popular batch-processing High-Throughput Computing system. We will also release our depth-map patch for POVRay so that you can render sequences for your own trajectories and are not limited to working on ours.

Few Pointers For Data Format And Usage

1. The uncompressed RAW format stores each depth file as an array of doubles, and there is a separate camera file for every frame. The depth map provided in this format is actually the Euclidean distance from the camera to a point in the scene. You will need to refer to our Ground Truth Data Parsing (Source Code) section for the appropriate MATLAB or C++ files to parse the data.
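Because the stored value is a distance along the viewing ray, code that expects the more common planar z-depth usually needs a conversion first. The following is a minimal sketch of that conversion, assuming a pinhole camera model; `radialToPlanarDepth` is a hypothetical name and is not part of the released parsing code.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper (not from the released parsing code).
// Converts the Euclidean distance stored for pixel (u, v) into planar z-depth,
// assuming a pinhole camera with focal lengths fx, fy and principal point (cx, cy).
double radialToPlanarDepth(double distance, double u, double v,
                           double fx, double fy, double cx, double cy)
{
    // Viewing ray through the pixel, expressed on the z = 1 plane.
    const double x = (u - cx) / fx;
    const double y = (v - cy) / fy;
    // The 3D point is distance * ray / |ray|, so its z component is distance / |ray|.
    return distance / std::sqrt(x * x + y * y + 1.0);
}
```

At the principal point the two quantities coincide; towards the image corners the z-depth becomes noticeably smaller than the stored distance.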

| Perfect Ray-Traced Images: Fast Motion |

| Photo-realistic Images (With Motion Blur And Noise): Fast Motion |

| Perfect Ray-Traced Images: Slow Motion |

Notable Points

1. Our motion blur generation process renders several image samples around a given time-stamp. We average the irradiance returned by POVRay over these samples and push the result through the Camera Response Function (CRF) of our Basler camera to obtain the corresponding brightness.

2. We also obtain the Noise Level Functions (NLF) of our Basler camera and use them to add noise to the images. Noise is added to each sample image first, before averaging to obtain the motion-blurred image.
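The two points above can be sketched for a single pixel and channel as follows. This is only an illustration under stated assumptions, not the code used to build the dataset: `blurPixel` is a hypothetical name, the CRF is passed in as an arbitrary callable, and irradiance is assumed to be normalised to [0, 1].

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

// Hypothetical sketch: average the (already noisy) irradiance samples rendered
// around one time-stamp, then map the mean irradiance to brightness via the CRF.
double blurPixel(const std::vector<double>& irradianceSamples,
                 const std::function<double(double)>& crf)
{
    assert(!irradianceSamples.empty());
    double sum = 0.0;
    for (double irr : irradianceSamples)
        sum += irr;
    const double meanIrradiance = sum / static_cast<double>(irradianceSamples.size());
    // Clamp to [0, 1] so the CRF is only evaluated on its assumed valid range.
    const double clamped = std::min(1.0, std::max(0.0, meanIrradiance));
    return crf(clamped);
}
```

For example, `blurPixel(samples, [](double e) { return std::pow(e, 1.0 / 2.2); })` uses a gamma curve as a stand-in for the measured Basler CRF, which is not reproduced here.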

The following code shows how we add noise to our irradiance image:

    // spline1dcalc and the interpolate* splines (inverse CRF and CRF per channel)
    // are defined outside this snippet.
    void AddGaussianNoise(CVD::Rgb< byte >& a,
                          float sigma_red_s,   float sigma_red_c,
                          float sigma_green_s, float sigma_green_c,
                          float sigma_blue_s,  float sigma_blue_c,
                          bool noise_flag, bool calc_irr_only)
    {
        // Note: all three generators are seeded with the same time value.
        static boost::mt19937 generator_red(static_cast< unsigned int >(std::time(0)));
        static boost::mt19937 generator_green(static_cast< unsigned int >(std::time(0)));
        static boost::mt19937 generator_blue(static_cast< unsigned int >(std::time(0)));

        // Brightness of each channel scaled to [0, 1].
        double red_val   = (double)a.red/255.0;
        double green_val = (double)a.green/255.0;
        double blue_val  = (double)a.blue/255.0;

        assert(red_val>=0 && green_val>=0 && blue_val >= 0);

        // Brightness -> irradiance.
        double irr_red   = spline1dcalc(interpolateIrrgivenbri_r,red_val);
        double irr_green = spline1dcalc(interpolateIrrgivenbri_g,green_val);
        double irr_blue  = spline1dcalc(interpolateIrrgivenbri_b,blue_val);

        if ( noise_flag )
        {
            // Signal-dependent noise: standard deviation sigma_s * sqrt(irradiance).
            boost::normal_distribution< double > normal_r(0.0, sigma_red_s*std::sqrt(irr_red));
            boost::normal_distribution< double > normal_g(0.0, sigma_green_s*std::sqrt(irr_green));
            boost::normal_distribution< double > normal_b(0.0, sigma_blue_s*std::sqrt(irr_blue));

            // Signal-independent camera noise: standard deviation sigma_c.
            boost::normal_distribution< double > normal_camera_noise_red(0.0,sigma_red_c);
            boost::normal_distribution< double > normal_camera_noise_green(0.0,sigma_green_c);
            boost::normal_distribution< double > normal_camera_noise_blue(0.0,sigma_blue_c);

            irr_red   = irr_red   + normal_r(generator_red)   + normal_camera_noise_red(generator_red);
            irr_green = irr_green + normal_g(generator_green) + normal_camera_noise_green(generator_green);
            irr_blue  = irr_blue  + normal_b(generator_blue)  + normal_camera_noise_blue(generator_blue);
        }

        irr_red   = std::min(1.0,std::max(0.0,irr_red));
        irr_green = std::min(1.0,std::max(0.0,irr_green));
        irr_blue  = std::min(1.0,std::max(0.0,irr_blue));

        // Irradiance -> brightness.
        double bri_red   = spline1dcalc(interpolateBrigivenirr_r,irr_red);
        double bri_green = spline1dcalc(interpolateBrigivenirr_g,irr_green);
        double bri_blue  = spline1dcalc(interpolateBrigivenirr_b,irr_blue);

        bri_red   = std::min(1.0,std::max(0.0,bri_red));
        bri_green = std::min(1.0,std::max(0.0,bri_green));
        bri_blue  = std::min(1.0,std::max(0.0,bri_blue));

        if ( !calc_irr_only )
        {
            a.red   = (unsigned char)(bri_red*255.0);
            a.green = (unsigned char)(bri_green*255.0);
            a.blue  = (unsigned char)(bri_blue*255.0);
        }
        else
        {
            // Write the irradiance back directly; note that the cast truncates to 0 or 1.
            a.red   = (unsigned char)irr_red;
            a.green = (unsigned char)irr_green;
            a.blue  = (unsigned char)irr_blue;
        }
    }
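Read as a noise model, the function above adds two zero-mean Gaussian samples to the irradiance $I$ of each channel: one whose standard deviation scales with $\sqrt{I}$ and one with a constant standard deviation. Assuming the two draws are independent, this is equivalent to a single Gaussian whose variance grows linearly with irradiance. The symbols $\sigma_s$ and $\sigma_c$ stand for the per-channel `sigma_*_s` and `sigma_*_c` arguments; the notation itself is ours, not taken from the code.

```latex
\tilde{I} = I + n_s + n_c, \qquad
n_s \sim \mathcal{N}\!\left(0,\ \sigma_s^2 I\right), \quad
n_c \sim \mathcal{N}\!\left(0,\ \sigma_c^2\right)
\quad\Longrightarrow\quad
\operatorname{Var}\!\left[\tilde{I} \mid I\right] = \sigma_s^2 I + \sigma_c^2
```

The clamping of the noisy irradiance to [0, 1] that follows in the code is not captured by this expression.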

| Nomenclature of Images and Rendering Commands |

The sequence contains different frame-rates interpolated on the same trajectory. Directories containing perfect ray-traced images have file names of the form scene_02_0032.png, where 02 denotes the blur sample number and 0032 denotes the image number in the sequence for that frame-rate. Some images are identical across frame-rates, because the frame-rates share common shutter time instances as a result of time sampling, so we did not need to render the duplicates. Although we provide all the perfect ray-traced data files, you do not need to store the duplicates: you can create symbolic links instead and save disk space (sometimes half). The same applies to the depth files and camera pose files.
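As a small illustration of this naming scheme, the sketch below splits a file name into its blur sample number and image number. `SceneFileName` and `parseSceneFileName` are hypothetical names introduced here; they are not part of the released code.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical result type: the two numbers encoded in "scene_BB_FFFF.png".
struct SceneFileName {
    bool valid;       // false if the name does not match the pattern
    int  blurSample;  // BB, e.g. 2 for "02"
    int  frameIndex;  // FFFF, e.g. 32 for "0032"
};

// Hypothetical helper: parses a perfect ray-traced image file name.
SceneFileName parseSceneFileName(const std::string& name)
{
    SceneFileName out = { false, 0, 0 };
    int consumed = 0;
    // %n records how many characters were consumed, so trailing junk is rejected.
    const int matched = std::sscanf(name.c_str(), "scene_%d_%d.png%n",
                                    &out.blurSample, &out.frameIndex, &consumed);
    out.valid = (matched == 2 && consumed == static_cast<int>(name.size()));
    return out;
}
```

The same pattern with a different extension would apply to the depth and camera files that share the image's base name.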

Our rendering files for the different frame-rates look something like the following:

| Ground Truth Data Parsing (Source Code) |

The ground truth data can be parsed with the MATLAB, C++ and Python code we provide. Jürgen Sturm visited us and suggested a ground truth format similar to his, so we plan to make our ground truth format similar to that of Jürgen Sturm's dataset. Our MATLAB files are given below:

Parsing Ground Truth Files MATLAB Source Code!

Instructions to use the MATLAB files

getcamK.m file reads the camera file (e.g. scene_00_0231.txt) and gives out the K matrix.

computeRT.m file also accepts the camera file and gives the camera pose [R | t]. The poses are expressed with respect to the POVRay world frame of reference, i.e. Tpov_cam, which reads as the pose of the camera with respect to the POVRay world.

compute3Dpositions.m file accepts camera file as well as the depth file (e.g. scene_00_0231.depth) and gives the 3D positions in camera reference frame.

computeMotionField.m needs the 3D positions of points in the reference frame and the poses of the current frame and the reference frame, and returns the optical flow.

computeMotionImages.m is a sample program that takes a reference image, depth-map and pose file and gives a warped image obtained using the optical flow.
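How these pieces fit together can be sketched in C++ (chosen to match the other code on this page; this is not a translation of the MATLAB files). The sketch assumes each pose is Tpov_cam, i.e. a rotation R and translation t that take points from the camera frame to the POVRay world, and a pinhole projection with the intrinsics from K. All type and function names are hypothetical, and the sign convention of the flow may differ from that of computeMotionField.m.

```cpp
#include <cassert>
#include <cmath>

struct Vec3       { double x, y, z; };
struct Pose       { double R[3][3]; Vec3 t; };   // T_pov_cam: camera pose in the POVRay world
struct Intrinsics { double fx, fy, cx, cy; };
struct Pixel      { double u, v; };

// Convenience constructor for a pose with identity rotation.
Pose identityPose(const Vec3& t)
{
    return { { {1.0, 0.0, 0.0}, {0.0, 1.0, 0.0}, {0.0, 0.0, 1.0} }, t };
}

// Camera frame -> world frame: X_w = R * X_c + t.
Vec3 camToWorld(const Pose& T, const Vec3& p)
{
    return { T.R[0][0]*p.x + T.R[0][1]*p.y + T.R[0][2]*p.z + T.t.x,
             T.R[1][0]*p.x + T.R[1][1]*p.y + T.R[1][2]*p.z + T.t.y,
             T.R[2][0]*p.x + T.R[2][1]*p.y + T.R[2][2]*p.z + T.t.z };
}

// World frame -> camera frame: X_c = R^T * (X_w - t).
Vec3 worldToCam(const Pose& T, const Vec3& p)
{
    const Vec3 d = { p.x - T.t.x, p.y - T.t.y, p.z - T.t.z };
    return { T.R[0][0]*d.x + T.R[1][0]*d.y + T.R[2][0]*d.z,
             T.R[0][1]*d.x + T.R[1][1]*d.y + T.R[2][1]*d.z,
             T.R[0][2]*d.x + T.R[1][2]*d.y + T.R[2][2]*d.z };
}

// Pinhole projection of a point given in the camera frame.
Pixel project(const Intrinsics& K, const Vec3& p)
{
    return { K.fx * p.x / p.z + K.cx, K.fy * p.y / p.z + K.cy };
}

// Flow of one point: its pixel in the current image minus its pixel in the reference image.
Pixel motionField(const Intrinsics& K, const Pose& ref, const Pose& cur, const Vec3& pointInRef)
{
    const Pixel before = project(K, pointInRef);
    const Pixel after  = project(K, worldToCam(cur, camToWorld(ref, pointInRef)));
    return { after.u - before.u, after.v - before.v };
}
```

For instance, with identity rotations, a point 2 units in front of the reference camera, and a current camera shifted by 0.1 along x, the point moves by about -24 pixels horizontally for fx = 481.2.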


C++ versions of parsing (NEW)

computeTpov_cam.cpp file reads the camera file (.txt format) and returns the SE3 pose of camera with respect to POVRay world.

Fill3Dpoints.cpp needs the K matrix of the camera, reads the depth file, and gives the [x, y, z] points in the camera coordinate frame. We simply used the K matrix obtained from the MATLAB getcamK.m function and kept it fixed for all frames.

    float K[3][3] = { { 481.20f,    0.00f, 319.50f },
                      {   0.00f, -480.00f, 239.50f },
                      {   0.00f,    0.00f,   1.00f } };

Our coordinate system has the Y-axis pointing DOWNWARDS, contrary to the POVRay coordinate system, in which it points UPWARDS; hence the negative sign in front of K[1][1].
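To make the effect of the negative K[1][1] concrete, the sketch below back-projects one pixel using the K values quoted above and the Euclidean distance stored in the depth file. It is an illustration only and not the released Fill3Dpoints.cpp; `Point3`, `kDatasetK` and `backProject` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Point3 { double x, y, z; };

// K values as quoted on this page (row-major).
const double kDatasetK[3][3] = { { 481.20,    0.00, 319.50 },
                                 {   0.00, -480.00, 239.50 },
                                 {   0.00,    0.00,   1.00 } };

// Hypothetical sketch: 3D point in the camera frame for pixel (u, v), given the
// Euclidean camera-to-point distance and a 3x3 intrinsic matrix K.
Point3 backProject(const double K[3][3], double u, double v, double distance)
{
    // Direction of the viewing ray on the z = 1 plane.
    const double dx = (u - K[0][2]) / K[0][0];
    const double dy = (v - K[1][2]) / K[1][1];
    // Scale the ray so that its length equals the stored distance.
    const double z = distance / std::sqrt(dx * dx + dy * dy + 1.0);
    return { dx * z, dy * z, z };
}
```

With this K, a pixel above the principal point (smaller row index) comes out with a positive y coordinate, and a pixel below it with a negative one.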


Extras (Trajectories for 3d reconstruction)

| Other Items Of Possible Interest |

StereoPOV: An unofficial patch for POVRay that gives stereo images for many cameras. We will add stereo images for download on our website very soon.

VLPOV: Another unofficial patch from Andrea Vedaldi for POVRay we used to parse the POVRay files and obtain ground truth poses and depth.

CVPoV: CVPoV can be used to compute the optical flow between two images given the depth-map and poses. Our codebase provides this as well; we mention CVPoV here because we built our code on it.

POVRay Documentation: POVRay documentation and other related stuff.

If you find our dataset helpful, please cite our ECCV paper.

    @InProceedings{handa:etal:ECCV2012,
      author    = {A. Handa and R. A. Newcombe and A. Angeli and A. J. Davison},
      title     = "Real-Time Camera Tracking: When Is High Frame-Rate Best?",
      booktitle = "Proc. of the European Conference on Computer Vision (ECCV)",
      year      = "2012",
      month     = "Oct.",
    }


Please report any bugs and suggestions for improvement to

handa(dot)ankur(at)gmail(dot)com
