Goal-based agents

In everyday life we set goals to get things done, and those goals push us toward the right decisions when we have to choose. A simple example is a shopping list: our goal is to pick up everything on it. That makes the decision easy when we can only afford one of milk or orange juice. Milk is on the list and orange juice is not, so we choose the milk.

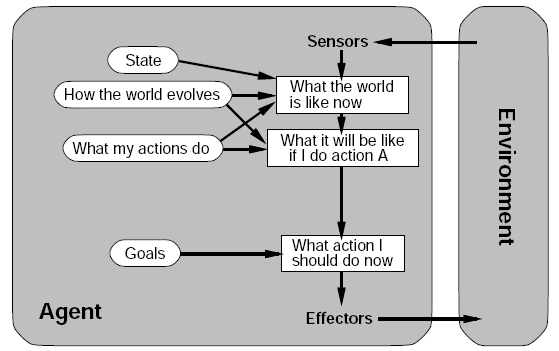

An intelligent agent likewise needs a set of goals that describe desirable situations. The agent can combine these goals with a set of actions and their predicted outcomes to see which action(s) achieve its goal(s).
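As a minimal sketch of that idea, the shopping example can be written as a one-step decision: predict the outcome of each action and pick one whose outcome satisfies the goal. Everything here (the prices, the budget, and the names `predict`, `goal_satisfied` and `choose_action`) is an assumption made up for illustration, not something taken from the source.

```python
# Hypothetical prices for the illustration; not from the source text.
PRICES = {"milk": 2, "orange juice": 3}


def predict(state, action):
    """Predicted outcome of buying one item: returns the new (basket, money)."""
    basket, money = state
    price = PRICES[action]
    if price > money:
        return state  # cannot afford the item, so nothing changes
    return (basket | {action}, money - price)


def goal_satisfied(state, shopping_list):
    """The goal holds when every item on the list is in the basket."""
    basket, _ = state
    return shopping_list <= basket


def choose_action(state, actions, shopping_list):
    """Return the first action whose predicted outcome meets the goal, else None."""
    for action in actions:
        if goal_satisfied(predict(state, action), shopping_list):
            return action
    return None


# Empty basket, enough money for only one of the two items.
state = (frozenset(), 3)
chosen = choose_action(state, ["orange juice", "milk"], frozenset({"milk"}))
print(chosen)
```

With these assumed numbers the agent picks milk, because only that purchase leads to a state in which the shopping list is complete.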

Achieving a goal can take one action or many. Search and planning are two subfields of AI devoted to finding sequences of actions that achieve an agent's goals.
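When a goal needs several actions, the agent has to search for a sequence rather than a single step. The sketch below uses breadth-first search, which is only one of many possible search strategies, on a made-up rover that moves along a line of positions 0 to 4. The function names and the rover world are illustrative assumptions.

```python
from collections import deque


def plan(start, is_goal, actions, result):
    """Breadth-first search for a shortest action sequence that reaches a goal state."""
    frontier = deque([(start, [])])  # (state, actions taken so far)
    visited = {start}
    while frontier:
        state, path = frontier.popleft()
        if is_goal(state):
            return path
        for action in actions(state):
            next_state = result(state, action)
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, path + [action]))
    return None  # no sequence of actions reaches the goal


# Hypothetical world: a rover on positions 0..4 of a straight track.
def rover_actions(position):
    return ["forward", "back"]


def rover_result(position, action):
    step = 1 if action == "forward" else -1
    return min(4, max(0, position + step))  # stay on the track


route = plan(0, lambda position: position == 3, rover_actions, rover_result)
print(route)
```

Here the goal is expressed as a test on states (`is_goal`), so changing the goal means passing a different test, not rewriting the search.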

Unlike the previous reflex agents, this agent reviews many actions before acting and chooses the one that comes closest to achieving its goals, whereas a reflex agent just has an automatic response to certain situations.

Although the goal-based agent does a lot more work than the reflex agent, it is much more flexible because the knowledge used for decision making is represented explicitly and can be modified. For example, if our Mars lander needed to get up a hill, the agent could update its knowledge of how much power to put into the wheels to reach certain speeds, and all relevant behaviors would then automatically follow the new knowledge about moving. In a reflex agent, by contrast, many condition-action rules would have to be rewritten.
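The Mars lander example can be sketched in a few lines, assuming a deliberately crude model in which speed is proportional to wheel power. The power levels, the `speed_per_power` values and the function name are all hypothetical; the point is only that changing one piece of explicit knowledge changes the chosen action without touching any rules.

```python
# Hypothetical discrete power settings for the wheels.
POWER_LEVELS = [1, 2, 3, 4, 5]


def choose_power(target_speed, speed_per_power):
    """Pick the power level whose predicted speed is closest to the target speed."""
    return min(
        POWER_LEVELS,
        key=lambda power: abs(power * speed_per_power - target_speed),
    )


# Same goal (a target speed of 4.0), different knowledge about the terrain.
flat = choose_power(target_speed=4.0, speed_per_power=2.0)  # assumed value on flat ground
hill = choose_power(target_speed=4.0, speed_per_power=1.0)  # assumed value on a hill
print(flat, hill)
```

A reflex agent would instead need separate condition-action rules for each terrain and speed, each of which would have to be edited by hand.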

Sources: Artificial Intelligence: A Modern Approach by Stuart Russell and Peter Norvig