The unwitty burden of the Model Context Protocol (MCP)

Since the start of this year, there has been a lot of buzz around the Model Context Protocol and how it supercharges LLMs, followed by the usual “this changes everything” hype. I thought I would drill down to the core elements of what it is and what it does, and leave you to jduge (deliberate typo for the authenticity of the article) the rest.

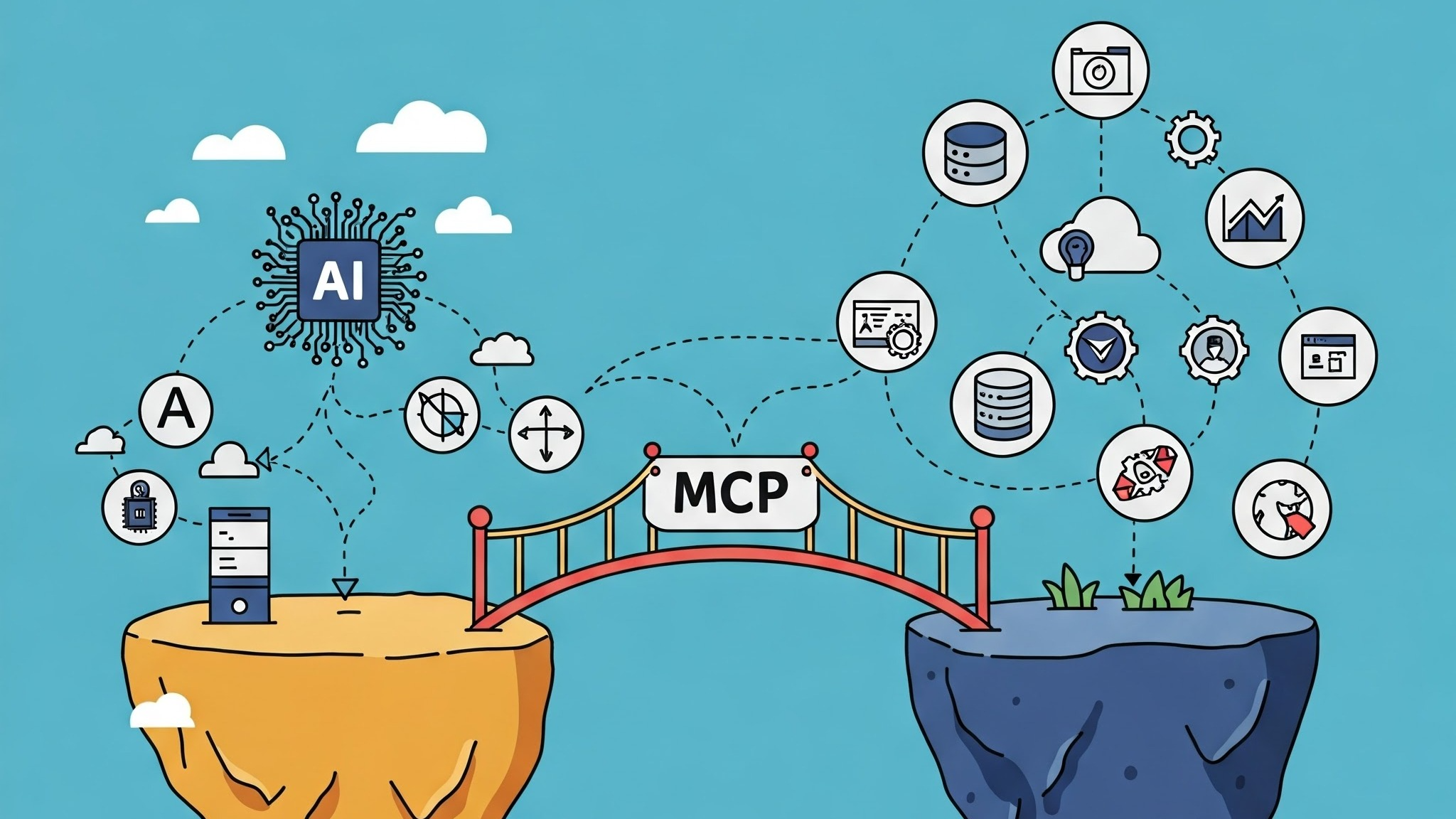

MCP is an open protocol that standardizes how applications provide context to LLMs.

It allows LLMs to “talk” to services like your filesystem or your Spotify playlists to do things and get information. If this sounds familiar, that is because it is yet another incarnation of plugging small things into a larger thing. This time, though, the larger thing is a capable language model that can hopefully make sense of these smaller things and use them. What are we plugging in? An MCP server can provide three kinds of things to an LLM:

- Resources: File-like data that can be read by clients (like API responses or file contents). The most basic example is a weather service exposing up-to-date weather information.

- Tools: Functions that can be called by the LLM (with user approval, for now). The earliest and simplest tool in the LLM world has been a calculator, because supposedly superintelligent AI agents are about as good at mental math as I am, and I needed a TI calculator too.

- Prompts: Pre-written templates that help users accomplish specific tasks. This part lets developers engineer good prompts up front, saving you and the LLM the time spent figuring out what works.
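To make the three primitives concrete, here is a toy, in-memory sketch in Python. It is not the official MCP SDK; `ToyServer`, the `weather://london/today` URI and the sample values are all hypothetical, and the point is only that a server keeps resources, tools and prompts as three separate kinds of things.

```python
from typing import Callable


class ToyServer:
    """Hypothetical stand-in for an MCP server (NOT the real SDK)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.resources: dict[str, Callable[[], str]] = {}  # URI -> reader
        self.tools: dict[str, Callable[..., str]] = {}      # name -> function
        self.prompts: dict[str, str] = {}                   # name -> template

    def resource(self, uri: str):
        # Decorator factory: register a read-only data source under a URI.
        def register(fn: Callable[[], str]) -> Callable[[], str]:
            self.resources[uri] = fn
            return fn
        return register

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        # Decorator: register a callable action under the function's name.
        self.tools[fn.__name__] = fn
        return fn


server = ToyServer("demo")


# Resource: file-like data identified by a (hypothetical) URI.
@server.resource("weather://london/today")
def london_weather() -> str:
    return "Light rain, 14°C"  # hard-coded stand-in for a real weather API


# Tool: a function the LLM may ask to call (after user approval).
@server.tool
def add(a: float, b: float) -> str:
    return str(a + b)


# Prompt: a reusable template the user can pick.
server.prompts["summarize"] = "Summarize the following text in three bullet points:\n{text}"

print(server.resources["weather://london/today"]())
print(server.tools["add"](2, 3))
print(server.prompts["summarize"].format(text="MCP is glue."))
```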

There is no magic here, though: these components have to be implemented. While some of the work can be automated with LLMs (along the lines of “here is the documentation, go make me an MCP server”), implementing MCP servers more often than not requires manual intervention. The code is glue between the downstream service’s API and a natural-language description that lets the LLM understand what that API does.

Here is the classic weather-alert “tool”, taken from the MCP documentation:

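```python
from mcp.server.fastmcp import FastMCP

# Server instance and API base URL, set up earlier in the same tutorial.
mcp = FastMCP("weather")
NWS_API_BASE = "https://api.weather.gov"

# make_nws_request() (async HTTP GET returning parsed JSON or None) and
# format_alert() (turns one alert into readable text) are helpers defined
# elsewhere in the tutorial and are not shown here.

@mcp.tool()
async def get_alerts(state: str) -> str:
    """Get weather alerts for a US state.

    Args:
        state: Two-letter US state code (e.g. CA, NY)
    """
    url = f"{NWS_API_BASE}/alerts/active/area/{state}"
    data = await make_nws_request(url)

    # No response, or a response without the expected "features" list.
    if not data or "features" not in data:
        return "Unable to fetch alerts or no alerts found."

    if not data["features"]:
        return "No active alerts for this state."

    # One formatted block per alert, separated by "---" for the LLM to read.
    alerts = [format_alert(feature) for feature in data["features"]]
    return "\n---\n".join(alerts)
```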

Even if you’re not familiar with Python, you can roughly tell that it fetches the alerts, checks for errors and formats the result as text for the LLM to process. So where is the AI magic, if any, and why do we need this?
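To underline how little AI there is in this, here is the same branching logic with the network call removed, run against a made-up response. `alerts_to_text` and this `format_alert` are hypothetical stand-ins (the tutorial has its own `format_alert`), and the property names assume the shape of the National Weather Service alerts GeoJSON.

```python
from typing import Any, Optional


def format_alert(feature: dict[str, Any]) -> str:
    # Hypothetical formatter: pull a few fields from one GeoJSON feature.
    props = feature.get("properties", {})
    return (
        f"Event: {props.get('event', 'Unknown')}\n"
        f"Area: {props.get('areaDesc', 'Unknown')}\n"
        f"Severity: {props.get('severity', 'Unknown')}"
    )


def alerts_to_text(data: Optional[dict[str, Any]]) -> str:
    # Same branching as get_alerts, minus the HTTP request.
    if not data or "features" not in data:
        return "Unable to fetch alerts or no alerts found."
    if not data["features"]:
        return "No active alerts for this state."
    return "\n---\n".join(format_alert(f) for f in data["features"])


# Made-up sample shaped like an NWS alerts response.
sample = {"features": [{"properties": {"event": "Flood Warning",
                                        "areaDesc": "Sacramento County",
                                        "severity": "Severe"}}]}
print(alerts_to_text(sample))
print(alerts_to_text({"features": []}))
print(alerts_to_text(None))
```

Every line is plumbing: parse a dictionary, pick a few keys, join some strings.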

Boatload of glue

The problem slowly brewing behind the scenes is the sheer number of small code snippets exposed to the LLM. Everything our intelligent LLMs can do in the outside world has to be glued to something. The more complex and clever a service looks, the more likely it is that humans had to implement it.

```mermaid
flowchart LR
    Host["Client (Claude, IDEs, Tools)"]
    S1["MCP Server A"]
    S2["MCP Server B"]
    S3["MCP Server C"]
    Host <-->|"MCP"| S1
    Host <-->|"MCP"| S2
    Host <-->|"MCP"| S3
    S1 <--> D1[("Data Source A")]
    S2 <--> D2[("Data Source B")]
    subgraph "Internet"
        S3 <-->|"Web APIs"| D3[("Remote Service")]
    end
```
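The diagram boils down to a host that collects the tools of every connected server into one catalogue and routes each call to whichever server owns it. A minimal sketch of that routing, assuming each server is just an in-memory dictionary of functions (a real host would speak MCP to each server over a transport such as stdio or HTTP); all server and tool names are hypothetical:

```python
from typing import Callable

# Hypothetical servers: tool name -> function. Stand-ins for real MCP servers.
servers: dict[str, dict[str, Callable[..., str]]] = {
    "server_a": {"read_file": lambda path: f"<contents of {path}>"},
    "server_b": {"query_db": lambda sql: f"<rows for {sql!r}>"},
    "server_c": {"search_web": lambda q: f"<results for {q!r}>"},
}


def list_tools() -> dict[str, str]:
    """Merge every server's tools into one catalogue: tool name -> server name."""
    catalogue: dict[str, str] = {}
    for server_name, tools in servers.items():
        for tool_name in tools:
            if tool_name in catalogue:
                raise ValueError(f"duplicate tool name: {tool_name}")
            catalogue[tool_name] = server_name
    return catalogue


def call_tool(name: str, **kwargs: str) -> str:
    """Route a tool call chosen by the LLM to the server that owns the tool."""
    server_name = list_tools()[name]
    return servers[server_name][name](**kwargs)


print(sorted(list_tools()))
print(call_tool("search_web", q="MCP spec"))
```

Each new capability means one more entry in `servers`, and someone still has to write the function behind it.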

There are already hundreds of MCP servers that let LLMs interact with almost anything you like:

- Databases and filesystems: there are MCP servers for PostgreSQL, Google Drive and more.

- Dev tools: the list is really long here, but the usual suspects such as Git and GitHub are covered.

- Web and search: you can let the LLM run web searches and fetch the web pages you want.

- Misc: a Spotify MCP server lets your LLM play and search for tracks and manage playlists.

The list has been growing rapidly, partly because the barrier to entry is very low. Anyone can wire up a connection and call the result an intelligent AI agent. It makes for a great marketing headline, “Gain 10x ROI with an AI agent”, even when the agent is a few snippets of Python 🤔

This proliferation of MCP servers reminds me of the rise of REST APIs. The idea of creating common ground that lets programs communicate with each other is a very promising one. But a REST API actually did something, such as looking up the weather for a location in a database and returning it. MCP servers share some common ground with REST, with resources roughly corresponding to GET requests and tools to POST requests, but most of them do little work of their own: they delegate to an existing API instead.
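On the wire, MCP is not REST: every interaction is a JSON-RPC 2.0 message carrying a method name such as `resources/read` or `tools/call`, rather than an HTTP verb on a URL. A rough sketch of the two requests a client might send, assuming a hypothetical `weather://CA/alerts` resource URI and the `get_alerts` tool from the weather example; the server receiving the `tools/call` would then turn around and make an ordinary HTTP GET against the weather API, which is the delegation described above.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def jsonrpc_request(method: str, params: dict) -> str:
    """Build a JSON-RPC 2.0 request string."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids),
                       "method": method, "params": params})


# Roughly the "GET" side: read a resource by URI (hypothetical URI).
read_msg = jsonrpc_request("resources/read", {"uri": "weather://CA/alerts"})

# Roughly the "POST" side: ask the server to run a tool with arguments.
call_msg = jsonrpc_request("tools/call",
                           {"name": "get_alerts", "arguments": {"state": "CA"}})

print(read_msg)
print(call_msg)
```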

How did we end up here?

Computers do not natively “speak” natural language. They run computer programs and need structured exchange of information. Most of our infrastructure, services and tools are built around letting computers interact with other computers. Even reading this article requires many servers to coordinate and transfer data, and your computer has to interpret the incoming data and follow instructions to render the article.

On the other hand, the advent of language models created a natural-language interface for computers. They mostly understand what we are trying to say, thanks to their vast training data. For simplicity, let’s say they live in natural-language land.

So we have lots of services and computers built on structured information-exchange protocols such as the Hypertext Transfer Protocol (HTTP), and we have ended up with a computer program that mimics natural language. How can we make the language model do useful stuff by talking to computers? We translate for it, of course! And that’s what an MCP server is all about…
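Much of that translation is mechanical. MCP tools are advertised to the client with a name, a natural-language description and a JSON Schema for their inputs (`inputSchema`), and Python SDKs can derive these from a function’s signature and docstring. The sketch below approximates that step with the standard `inspect` module; it is a simplification for illustration, not how any particular SDK implements it, and `get_forecast` with its `days` parameter is a hypothetical tool.

```python
import inspect

# Map a few Python annotations to JSON Schema types (simplified).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn) -> dict:
    """Turn a Python function into a name/description/inputSchema entry.

    Only plain str/int/float/bool parameters are handled; anything else
    falls back to "string".
    """
    sig = inspect.signature(fn)
    properties = {}
    required = []
    for name, param in sig.parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value -> the LLM must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties,
                        "required": required},
    }


def get_forecast(latitude: float, longitude: float, days: int = 3) -> str:
    """Get the weather forecast for a location."""
    return "..."  # the actual weather API call would go here


print(describe_tool(get_forecast))
```

The docstring is the only part the LLM really “reads”; the rest is type bookkeeping a human still has to get right.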

Unwitty at best

At the moment, the “giving AI agents tools” slogan describes a poor engineer’s job rather than an intelligent undertaking.

I hope this translation between services and natural language for LLMs to use is only temporary. If we are to unlock what is possible, it seems to me that LLMs need to reason for themselves about what these services are and how to connect to and use them. Spoon-feeding all these services, even as a collective open-source endeavour, is unlikely to yield a scalable long-term solution.

What if AI just writes its own MCP servers? That is the counter-argument from believers who suggest that one day LLMs will be powerful enough to write connectors for anything they want, after which things could get out of control (e.g. financial data access, government AIs). Perhaps one day, but it doesn’t look like that day is today.