I teach the Robotics Course in the Department of Computing, attended by third years and MSc students. This is a one term course which focuses on mobile robotics, and aims to cover the basic issues in this dynamic field via lectures and a large practical element where students work in groups with real robot kits. The course introduces algorithms for mobile robotics, with a "learn by doing" and "build robots and algorithms from scratch" philosophy.

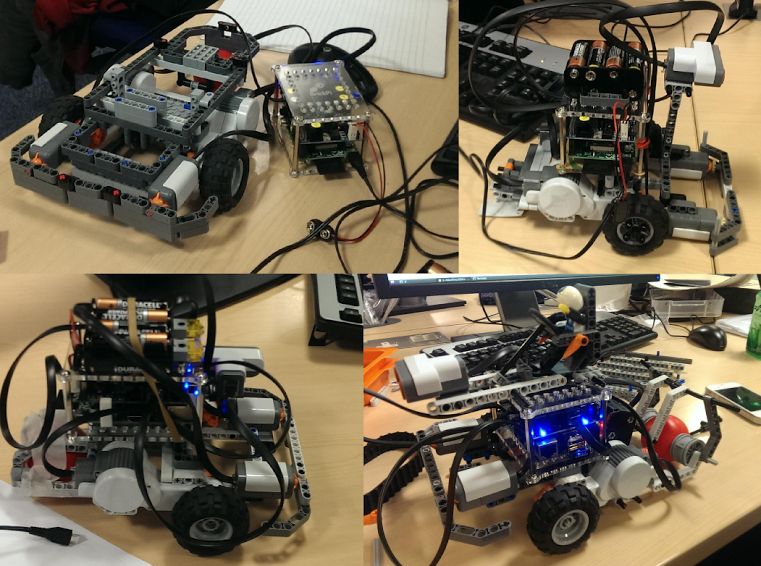

We will implement mobile robotics algorithms using Lego Mindstorms NXT parts, motors and sensors, controlled and programmed via Raspberry Pi single board Linux computers using BrickPi interface boards.

The course usually finishes with a competition where the groups compete to build and program the robot which can most effectively complete a certain challenge against the clock.

Thanks to Marwan Taher, Eric Dexheimer, Shinjeong Kim and Nicholas Fry, who are the current lab teaching assistants and another point of contact for any problems.

IMPORTANT: for the first week only (January 13th and 15th), there will be no practicals. Instead there will be two hours of live lecture from 11am to 1pm on Tuesday 13th, and one hour of remote lecture from 10am to 11am on Thursday 15th. The 9-10am slot on Thursday 15th will be empty.

For all other weeks of the course, we will have a single hour of face-to-face lecture, on Tuesday at 11am, in lecture room 311, Huxley Building. The other three hours will be a compulsory practical session, held in the teaching labs on level 2, Huxley Building.

All lectures will be recorded and uploaded to Panopto soon afterwards, where you can watch them again in the folder CO COMP60019 / COMP70084 - Robotics (Spring 2025-2026).

The full plan for lectures and tutorials will be kept up to date below, and I will announce any changes to lectures by email and on EdStem.

Lecture slides and practical sheets for the course will be available from the links below (the links will come alive week by week throughout term, usually just before that week's live lecture, and you won't need to look at them before the lectures).

| | Tuesday 11am | Tuesday 12pm | Thursday 9am | Thursday 10am |
|---|---|---|---|---|
| Week 1 Jan 13 and 15 | Lecture (311) 1. Introduction to Robotics | Lecture (311) 2. Robot Motion | | Lecture continued (remote) 2. Robot Motion |
| Week 2 Jan 20 and 22 | Lecture (311) 3. Sensors | Practical (219) 1. Accurate Robot Motion (assessed) | Practical continued (219) | Practical continued (219) |
| Week 3 Jan 27 and 29 | Lecture (311) 4. Probabilistic Robotics | Practical (219) 2. Sensors and Feedback Control | Practical continued (219) Assessment of Practical 1 | Practical continued (219) |
| Week 4 Feb 3 and 5 | Lecture (311) 5. Monte Carlo Localisation | Practical (219) 3. Probabilistic Motion (assessed) | Practical continued (219) | Practical continued (219) |
| Week 5 Feb 10 and 12 | Lecture (311) 6. Advanced Sonar Sensing | Practical (219) 4. Monte Carlo Localisation (assessed) | Practical continued (219) Assessment of Practical 3 | Practical continued (219) |
| Week 6 Feb 17 and 19 | Lecture (311) 7. Camera Measurements | Practical (219) 5. Planar Camera Calibration | Practical continued (219) Assessment of Practical 4 | Practical continued (219) |
| Week 7 Feb 24 and 26 | Lecture (311) 8. SLAM | Practical (219) 6. Navigation Challenge (assessed) | Practical continued (219) | Practical continued (219) |
| Week 8 Mar 3 and 5 | Lecture (311) Guest Lecture Dr Rob Deaves, Oxa | Practical (219) Practical/Revision Questions? | Practical (219) Navigation Challenge Competition | Practical continued (219) |

For practicals, you will work in fixed groups throughout term. Each group will receive a Robotics kit in a plastic box, and the group will keep this kit and be responsible for it throughout term. Please organise yourselves into groups of 4 or 5 members. It is not crucial to be in a fixed team during the first lecture week of the course, but please settle on your group by the first practical on Tuesday Jan 20th.

Live practical sessions will take place in the teaching labs on level 2 in the Huxley Building, and all students should attend to work in their groups. A large team of TAs and I will be present at all practical sessions to help with your questions.

There will be a new practical exercise set every week. These will be announced in lectures and a detailed practical sheet explaining what to do will be made available from the links in the schedule on this web page. You will be able to work on these exercises during the live practical sessions with the support of TAs, and use your own time outside of practical sessions to complete the exercises. General support outside of live sessions is available via EdStem.

Some practicals during term will be assessed. It will say clearly on the schedule and on each practical sheet whether the practical is assessed, and the mark scheme for assessment. The way we assess practicals is via a face to face discussion and live demonstration of your work with me or one of the teaching assistants in the labs. You will have one week to work on each of the assessed exercises, and the sessions when the assessments will happen are marked in the schedule above. The exact goals of each exercise and what you will have to demonstrate will be explained clearly in the practical sheets. These assessed practicals form the only coursework element of the Robotics course. No additional assessed exercises will be set and you will not need to submit any coursework documents or code. We will add up all marks from the assessed practicals throughout term to form a final coursework mark for each group. All members of a group will receive the same mark by default.

Robot Floor Cleaner Case Study: a tutorial we have used in previous years of Robotics to get students thinking about and discussing some of the capabilities of mobile robotic products. Questions and Answers.

Monte Carlo Localization: Efficient Position Estimation for Mobile Robots, Dieter Fox, Wolfram Burgard, Frank Dellaert, Sebastian Thrun. The original paper on MCL.

Rearrangement: A Challenge for Embodied AI, Dhruv Batra, Angel X. Chang, Sonia Chernova, Andrew J. Davison, Jia Deng, Vladlen Koltun, Sergey Levine, Jitendra Malik, Igor Mordatch, Roozbeh Mottaghi, Manolis Savva, Hao Su. An up-to-date discussion of the current challenges in robotics and embodied AI research, with a focus on research using simulation platforms (including Imperial's own RLBench, which is based on CoppeliaSim).

Thank you very much to Stefan Leutenegger, Duncan White, Geoff Bruce, Lloyd Kamara, Maria Valera-Espina, Riku Murai, Kirill Mazur, Aalok Patwardhan, Ignacio Alzuguray, Shikun Liu, Stephen James, Tristan Laidlow, Raluca Scona, Shuaifeng Zhi, Jan Czarnowski, Charlie Houseago, Binbin Xu, Zoe Landgraf, Hide Matsuki, Edgar Sucar, Joe Ortiz, Kentaro Wada, Andrea Nicastro, Robert Lukierski, Lukas Platinsky, Jan Jachnik, Jacek Zienkiewicz, Jindong Liu, Adrien Angeli, Ankur Handa and Simon Overell who helped enormously with the course in previous years; and to Ian Harries and Keith Clark who developed earlier material from which the current course has evolved. In particular Ian Harries deserves the credit for making this the practically-driven course it still is today.

The 2018 challenge was about accurate and fast localisation against a map, with robots starting in a randomly placed location and having to visit other waypoints as quickly as possible. The winning team of Qiulin Wang, Rui Zhou, Jianqiao Cheng and Chengzhi Shi had an extremely fast and efficient robot!

This year we had a path planning challenge, where the groups had to program their robots to use their sonar sensor to detect obstacles in a crowded area and then use a local planning approach to find a smooth but fast route across. Most groups followed the Dynamic Window Approach that I had shown in the lectures (and which you can try in simulation using this Python code). This video shows the incredibly fast and smooth robot from the winning team of Alessandro Bonardi, Alberto Spina and Riku Murai. Thanks to Charlie Houseago for the photo, video and commentary!

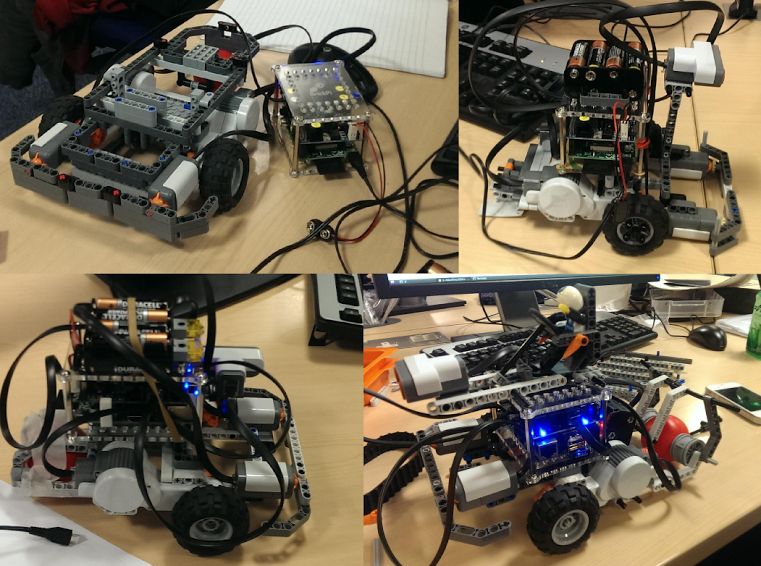

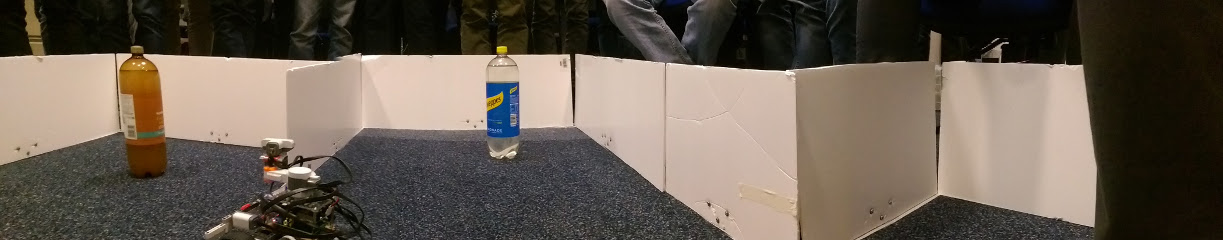
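For readers who have not met the Dynamic Window Approach mentioned above, the following is a minimal sketch of one planning step for a two-wheeled robot. It is not the course's simulation code: the function names, the parameter values (wheel base, speed and acceleration limits, weights) and the representation of obstacles as `(x, y, radius)` circles are all assumptions made for illustration. At each step the planner considers small changes to each wheel speed, predicts where the robot would be a short time ahead, and picks the option that best trades progress towards the goal against closeness to obstacles.

```python
import math


def predict_pose(x, y, theta, v_left, v_right, wheel_base, tau):
    """Predict the pose after tau seconds of constant wheel speeds."""
    v = (v_left + v_right) / 2.0              # forward speed
    omega = (v_right - v_left) / wheel_base   # turn rate
    if abs(omega) < 1e-9:                     # straight-line motion
        return (x + v * tau * math.cos(theta),
                y + v * tau * math.sin(theta),
                theta)
    radius = v / omega                        # signed radius of the arc
    new_theta = theta + omega * tau
    new_x = x + radius * (math.sin(new_theta) - math.sin(theta))
    new_y = y - radius * (math.cos(new_theta) - math.cos(theta))
    return new_x, new_y, new_theta


def choose_wheel_speeds(pose, speeds, goal, obstacles, wheel_base=0.2,
                        max_speed=0.3, max_accel=0.5, dt=0.1, tau=1.0,
                        safe_dist=0.3, robot_radius=0.1,
                        w_goal=10.0, w_obstacle=20.0):
    """Pick the best (v_left, v_right) from the reachable 'dynamic window'.

    All default values are illustrative assumptions, not course settings.
    """
    x, y, theta = pose
    step = max_accel * dt                     # largest speed change in one step
    dist_now = math.hypot(goal[0] - x, goal[1] - y)
    best_score, best_speeds = -math.inf, speeds
    for d_left in (-step, 0.0, step):
        for d_right in (-step, 0.0, step):
            # Candidate wheel speeds, clamped to the speed limit.
            v_l = max(-max_speed, min(max_speed, speeds[0] + d_left))
            v_r = max(-max_speed, min(max_speed, speeds[1] + d_right))
            nx, ny, _ = predict_pose(x, y, theta, v_l, v_r, wheel_base, tau)
            # Benefit: how much closer to the goal the predicted pose is.
            progress = dist_now - math.hypot(goal[0] - nx, goal[1] - ny)
            # Cost: penalise predicted poses within safe_dist of an obstacle.
            clearance = min(
                (math.hypot(ox - nx, oy - ny) - radius - robot_radius
                 for ox, oy, radius in obstacles),
                default=math.inf,
            )
            cost = w_obstacle * max(0.0, safe_dist - clearance)
            score = w_goal * progress - cost
            if score > best_score:
                best_score, best_speeds = score, (v_l, v_r)
    return best_speeds
```

Calling `choose_wheel_speeds((0, 0, 0), (0.0, 0.0), (2.0, 0.0), [])` repeatedly, and applying the returned speeds for `dt` seconds each time, would drive the simulated robot towards the goal. Obstacle positions would in practice come from sonar measurements.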

This year we tried quite a different challenge, but as ever with the emphasis on sensing, accurate localisation and speed. Three bottles were randomly placed within the course area which we have previously used for Monte Carlo Localisation, and the goal was to find them, proving this by bumping into each in turn, and then returning to the starting point within the course to prove that the robot was still localised. Several teams did very well, and the challenge was won by the team of Saurav Mitra, Andrew Li, Daniel Grumberg and Mohammad Karamlou who had a very fast and innovative strategy.


The winning robot was developed by Nicolas Paglieri, Clemens Lutz, Antonio Azevedo and Francesco Giovannini and completed the challenge in a remarkably fast 21 seconds, though impressively around half of the teams completed the whole course and a couple came close to this time. The winning team's robot cleverly used the Lego light sensors as proximity sensors, giving the robot an extra obstacle sensor which was particularly useful at high speed, and this together with fast planning gave them the best time.


The winners' robot was remarkably precise, and its motion included particularly nice curved entries into the waypoint spaces, all while maintaining very good speed such that it beat its nearest competitor by 8 seconds. The members of the winning group were Alexandre Vicente, Ajay Lakshmanan, Garance Bruneau, Kevin Keraudren, Axel Bonnet and Zae Kim (video courtesy of Jindong Liu).


The winning team this time consisted of Jim Li, Daniel Abebe, Robert Kopaczyk, Nicholas Heung and Cheuk Tam, and their robot's successful completion of the course in under 40 seconds is shown below (video courtesy of the team).

Again there were several teams which achieved the challenge impressively within the target time of 30 seconds, but the winners by a narrow margin were Ivan Dryanovski, Tingting Li, Wenbin Li, Edmund Noon and Ke Wang whose winning run is shown in the video below (video courtesy of the team).


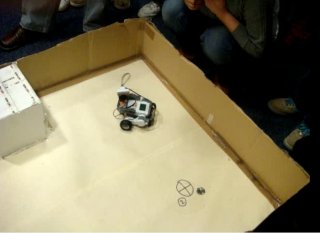

Several of the teams achieved good results, and one or two even made promising progress on the more difficult problem of global localisation (the "kidnapped robot problem"), where the robot had to initialise a localisation estimate from scratch when dropped at an arbitrary position in the course. This video shows the robot of the team of David Passingham, Vincent Dedoyard, John Payce and Mengru Li in action (video courtesy of the team).

The winning robot was from the team of Philip Stennett, Nicholas Ball, Maurice Adler and Wei Chieh Soon, which completed the course all three times with a total time of 36.9 seconds; this is a (very dark) video of their robot in action. The robots from the team of Si Yu Li, Henry Arnold, Shobhit Srivastava and Jonathan Dorling, and the team of Ricky Shrestha, Hussein Elgridly and Maxim Zverev also successfully completed the course three times.